Photo by Christian Nielsen on Unsplash

One of the things that every modern website needs is caching. After all, we don't want to be woken at 2 AM by an alert that our services are down because a spike in usage overwhelmed our databases.

In this post, we will:

- Scaffold an ASP.NET Core website

- Implement a catalog service using MongoDB

- Implement distributed caching using Redis

- Run our dependencies using Docker Compose

- Set up Redis Commander and Mongo Express to view/manage our services

Setting up an ASP.NET Core website

Let's quickly scaffold an ASP.NET Core website using the command line, then add the MongoDB and Redis connection settings to the application's configuration:

        "ConnectionString": "mongodb://localhost:27017",
        "Db": "catalog",
        "Collection": "products"
    },
    "Redis": {
        "Configuration": "localhost",
        "InstanceName": "web"
    }

Setting up dependencies

Let's now set up our dependencies: Redis, MongoDB, and the management interfaces Redis Commander and Mongo Express. It may sound complicated, but it is actually very simple with the right tools: Docker and Docker Compose.

Docker Compose 101

Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration. See the list of features.

    services:
      # we'll add our services in the next steps

Configuring our MongoDB instance

      catalog-db:
        image: mongo
        environment:
          # MONGO_INITDB_ROOT_USERNAME: root
          # MONGO_INITDB_ROOT_PASSWORD: todo
          MONGO_INITDB_DATABASE: catalog
        volumes:
          - .db.js:/docker-entrypoint-initdb.d/db.js:ro
        expose:
          - "27017"
        ports:
          - "3301:27017"

Configuring our Redis instance

As with MongoDB, let's now set up our Redis cache. Paste this at the bottom of your docker-compose.yml file:

      redis:
        image: redis:6-alpine
        expose:
          - "6379"
        ports:
          - "6379:6379"

Configuring the Management interfaces

Let's now set up the management interfaces, Mongo Express for MongoDB and Redis Commander for Redis, to access our resources (I'll show how to use them later). Again, paste the following into your docker-compose.yml file:

      mongo-express:
        image: mongo-express
        restart: always
        ports:
          - "8011:8081"
        environment:
          - ME_CONFIG_MONGODB_SERVER=catalog-db
          # MONGO_INITDB_ROOT_USERNAME: root
          # MONGO_INITDB_ROOT_PASSWORD: todo
        depends_on:
          - catalog-db

      # Redis Commander: tool to manage our Redis container from localhost
      redis-commander:
        image: rediscommander/redis-commander:latest
        environment:
          - REDIS_HOSTS=redis
        ports:
          - "8013:8081"
        depends_on:
          - redis

Querying Catalog Data

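The catalog queries are exposed through a repository interface:

    public interface ICatalogRepository
    {
        Task<IList<Category>> GetCategories();
        Task<Category> GetCategory(string slug);
        Task<Product> GetProduct(string slug);
        Task<IList<Product>> GetProductsByCategory(string slug);
    }

For example, GetCategories reads every document from the categories collection:

    public async Task<IList<Category>> GetCategories()
    {
        var c = _db.GetCollection<Category>("categories");
        // An empty BsonDocument filter matches every document in the collection.
        return (await c.FindAsync(new BsonDocument())).ToList();
    }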

Caching Catalog Data

Caching the catalog data involves three steps:

- setting up Redis with distributed caching

- implementing a service class

- adding the caching logic to the service class

Setting up the Redis Initialization

    {
        o.Configuration = cfg.Redis.Configuration;
        o.InstanceName = cfg.Redis.InstanceName;
    });

Implementing a Service Class

        ICatalogRepository repo,
        IDistributedCache cache)
    {
        _repo = repo;
        _cache = cache;
    }

Adding the caching logic

    public async Task<IList<Category>> GetCategories()
    {
        return await GetFromCache<IList<Category>>(
            "categories",
            "*",
            async () => await _repo.GetCategories());
    }

    private async Task<TResult> GetFromCache<TResult>(
        string key,
        string val,
        Func<Task<object>> func)
    {
        var cacheKey = string.Format(_keyFmt, key, val);
        var data = await _cache.GetStringAsync(cacheKey);

        // Cache miss: load from the repository and store the serialized result.
        if (string.IsNullOrEmpty(data))
        {
            data = JsonConvert.SerializeObject(await func());
            await _cache.SetStringAsync(cacheKey, data);
        }

        return JsonConvert.DeserializeObject<TResult>(data);
    }
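The cache-aside flow in this method can be tried in isolation, without Redis or MongoDB. The sketch below is a minimal approximation and not the article's implementation: a plain Dictionary stands in for IDistributedCache, System.Text.Json replaces JsonConvert, and the `LoadCategories` loader, the `loads` counter, and the cache key format are all hypothetical names introduced for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;
using System.Threading.Tasks;

// Assumption: an in-memory dictionary stands in for IDistributedCache.
var cache = new Dictionary<string, string>();
var loads = 0; // counts how often the "database" is actually queried

// Hypothetical loader standing in for _repo.GetCategories().
Task<List<string>> LoadCategories()
{
    loads++;
    return Task.FromResult(new List<string> { "books", "games" });
}

// Cache-aside: return the cached value if present; otherwise load it,
// store the serialized result, and return it.
async Task<T> GetFromCache<T>(string key, string val, Func<Task<T>> load)
{
    var cacheKey = $"{key}-{val}"; // key format is an assumption
    if (!cache.TryGetValue(cacheKey, out var json))
    {
        json = JsonSerializer.Serialize(await load());
        cache[cacheKey] = json;
    }
    return JsonSerializer.Deserialize<T>(json);
}

var first = await GetFromCache<List<string>>("categories", "*", LoadCategories);
var second = await GetFromCache<List<string>>("categories", "*", LoadCategories);

// prints "2 categories, 1 database call(s)"
Console.WriteLine($"{second.Count} categories, {loads} database call(s)");
```

The second call is served from the dictionary, so the loader runs only once. With a real distributed cache the same shape applies, with the dictionary lookups replaced by the cache's asynchronous get and set calls.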

    {
        _svc = svc;
    }

Management Interfaces

Accessing Mongo Express

Accessing Redis Commander

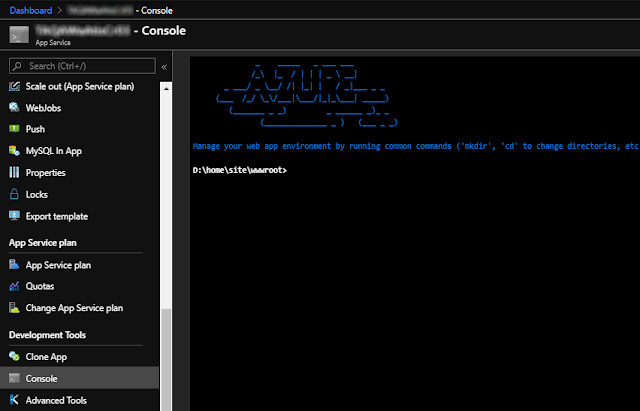

Running the Services

Final Thoughts

In this article, we reviewed how to use Redis, a very fast key-value store, as a distributed cache in front of a MongoDB database. We also leveraged Docker and Docker Compose to simplify the setup and initialization of our project, so we could get our application running and test it as quickly as possible.

Redis is one of the world's most used and loved databases and a very common option for caching. I hope you also saw how Docker and Docker Compose help developers by making complex environments like this one easy to rebuild.

Source Code
