Photo by Ante Hamersmit on Unsplash

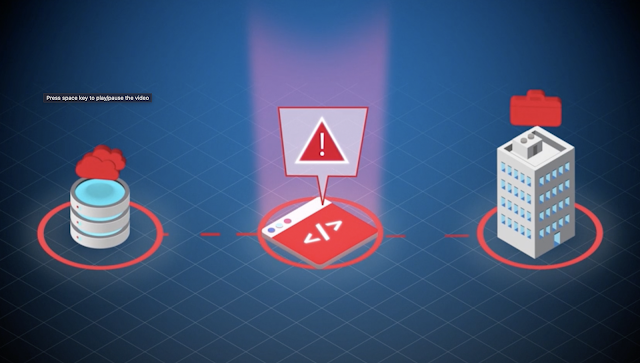

Cyber threats are a trend that unfortunately will not go away any time soon. In fact, they will just keep growing. As developers, it's our duty to build solutions that are reliable and resistant to attacks, which is a complex undertaking.

Fortunately, the OWASP project provides a lot of information on how to secure applications. It's available for free, and is created and maintained by security experts. So let's learn more about it.

What is OWASP

- O – Open

- W – Web

- A – Application

- S – Security

- P – Project

The Open Web Application Security Project is an online community that produces freely available articles, methodologies, documentation, tools, and technologies in the field of web application security.

OWASP is a non-profit community of international security researchers and experts dedicated to improving the security of software, with a special focus on AppSec (application security).

What it offers

Contrary to what you might think, OWASP is not only about documentation. Here are some highlights of what the project offers:

- Wide range of resources to help organizations mitigate security threats and reduce their exposure.

- Extensive documentation on cybersecurity practices

- Tooling to learn, test and validate different aspects of security

- Resources that help identify and mitigate security vulnerabilities in web applications and APIs

- OWASP Top Ten (the ten most critical risks in AppSec)

- OWASP Projects (an extensive and diverse collection of projects and tools, as we’ll see)

- Extensive technical documentation

- Chapters (community for application security professionals around the world)

- Conferences

- Web Security Testing Guide (WSTG)

- Education and Training

- Industry Reports

Let's learn more about them.

When, How and Why to Leverage OWASP

To keep it simple, you should use OWASP whenever you are building any application (client-facing or not) that interacts with data and is used by users (in other words, for most projects deployed in production).

More importantly, you should leverage OWASP because:

- Security and AppSec are HARD!

- Security is a moving target

- It offers a collection of best practices from security experts

- It is continuously updated to cover the most common attacks in AppSec

- Btw, did I mention that security is HARD?

Don’t implement security-related features “your way”. Most likely the result will not be secure enough. Leverage well-established patterns such as those provided by OWASP.

Flagship Projects

So let's take a look at some flagship projects.

OWASP Top Ten

One of OWASP’s most popular projects, OWASP Top 10 is a reference standard for the most critical web application security risks. The community regularly updates the list to reflect the ten risks it currently considers most critical (and most common).

Active for over 20 years, the project receives contributions from the international community of security experts and researchers. One of its benefits is that it raises awareness of the most critical risks and helps developers and security professionals prioritize their efforts in securing web applications.

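Here are the top 10 risks in the 2021 edition:

- A01: Broken Access Control

- A02: Cryptographic Failures

- A03: Injection

- A04: Insecure Design

- A05: Security Misconfiguration

- A06: Vulnerable and Outdated Components

- A07: Identification and Authentication Failures

- A08: Software and Data Integrity Failures

- A09: Security Logging and Monitoring Failures

- A10: Server-Side Request Forgery (SSRF)

OWASP Top 10 2021. Source: OWASP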
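To make one of these risks concrete, here is a minimal sketch of injection (A03 in the 2021 edition) using Python's built-in `sqlite3` module. The table, the user names, and the function names are hypothetical and exist only for illustration. The sketch contrasts a query built by string concatenation with a parameterized one.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users (name, role) VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is concatenated into the SQL text,
    # so a crafted value can change the meaning of the query.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safer: the value is bound as a parameter and never parsed as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    payload = "nobody' OR '1'='1"
    print("unsafe:", find_user_unsafe(conn, payload))  # leaks every row
    print("safe:  ", find_user_safe(conn, payload))    # matches nothing
```

With the crafted input, the concatenated query returns every row, while the parameterized query treats the whole string as a literal name and finds no match.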

OWASP Cheat Sheet Series

Another essential resource for building secure web applications, the OWASP Cheat Sheet Series provides easily accessible practice guides for application developers and defenders to follow.

The project offers more than 80 cheat sheets, each a concise guide to security best practices on a specific topic.

You should leverage it because it helps developers and security professionals apply proven practices when securing web applications.
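As an illustration of the kind of guidance the series covers, the sketch below follows the general shape of the Password Storage Cheat Sheet's advice: a random per-user salt, a slow key-derivation function, and a constant-time comparison. It uses PBKDF2 only because it ships with Python's standard library; the cheat sheet generally prefers Argon2id. The iteration count and the function names are assumptions for this example, so check the current cheat sheet before adopting any values.

```python
import hashlib
import hmac
import os
from typing import Tuple

# Assumed work factor for PBKDF2-HMAC-SHA256; verify against the
# current Password Storage Cheat Sheet before using it in production.
PBKDF2_ITERATIONS = 600_000


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest) for a new password."""
    salt = os.urandom(16)  # unique random salt per password
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS
    )
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest and compare it in constant time."""
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS
    )
    return hmac.compare_digest(candidate, expected)


if __name__ == "__main__":
    salt, digest = hash_password("correct horse battery staple")
    print(verify_password("correct horse battery staple", salt, digest))
    print(verify_password("wrong guess", salt, digest))
```

In a real application, a maintained password-hashing library is usually a better choice than hand-rolled helpers like these.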

OWASP Dependency-Check

OWASP Dependency-Check is a Software Composition Analysis (SCA) tool suite that identifies project dependencies and checks whether they have any known, publicly disclosed vulnerabilities.
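The idea behind SCA can be sketched in a few lines: compare the versions a project depends on against a list of known advisories. The code below is a simplified illustration of that idea and not how Dependency-Check itself is implemented. The package names, advisory identifiers, and versions are all made up for the example.

```python
from typing import Dict, List, NamedTuple, Tuple


class Advisory(NamedTuple):
    advisory_id: str  # hypothetical identifier, not a real CVE
    package: str
    fixed_in: str     # first version that is no longer affected


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '1.2.5' into (1, 2, 5) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def find_vulnerable(
    dependencies: Dict[str, str], advisories: List[Advisory]
) -> List[str]:
    """Report dependencies whose version is below an advisory's fix."""
    findings = []
    for adv in advisories:
        installed = dependencies.get(adv.package)
        if installed is None:
            continue  # the project does not use this package
        if parse_version(installed) < parse_version(adv.fixed_in):
            findings.append(
                f"{adv.package} {installed}: {adv.advisory_id} "
                f"(fixed in {adv.fixed_in})"
            )
    return findings


if __name__ == "__main__":
    # Made-up dependency list and advisories, for illustration only.
    deps = {"examplelib": "1.2.0", "otherlib": "3.1.4"}
    advisories = [
        Advisory("EXAMPLE-0001", "examplelib", "1.2.5"),
        Advisory("EXAMPLE-0002", "otherlib", "3.0.0"),
    ]
    for line in find_vulnerable(deps, advisories):
        print(line)
```

A real SCA tool also has to work out which library a file actually is and keep its vulnerability data current, which is where most of the difficulty lies.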

OWASP Juice Shop

OWASP Juice Shop is a very sophisticated (and deliberately insecure) web application for security training. It is also a great voluntary guinea pig for your security tools and DevSecOps pipelines!

Getting started with Juice Shop is easy! Check this GitHub page for more information.

OWASP Mobile Application Security

The OWASP Mobile Application Security project offers security standards for mobile apps and a comprehensive testing guide that covers the processes, techniques, and tools used during a mobile application security assessment.

OWASP Web Security Testing Guide

The OWASP Web Security Testing Guide project produces the premier cybersecurity testing resource for web application developers and security professionals. A PDF is available for free on GitHub.

Some highlights of WSTG:

- Fantastic guide to testing the security of web applications and web services.

- Created by security professionals and dedicated volunteers

- Framework of best practices used by penetration testers all over the world.

- 450+ pages of AppSec!

OWASP ZAP

One of my favourites, OWASP ZAP is the world’s most widely used web app scanner. It is free, open source, and actively maintained by a dedicated international team of volunteers. ZAP is a free alternative to the very popular (and excellent) Burp Suite.

Some of the features available on OWASP ZAP:

- Automated Scanning

- Manual Testing

- Spidering and Crawling

- Active and Passive Scanning

- Alerts and Reporting

- Session Management

- Fuzzer

- Authentication Support

- Plug-in Support

- WebSocket Testing

- Automation and Integration

- Community and Updates

- Multi-Platform Support

OWASP Amass

The OWASP Amass tool performs network mapping of attack surfaces and external asset discovery using open source information gathering and active reconnaissance techniques.

Conclusion

As cyber threats grow, developers should protect their applications from increasingly complex and sophisticated attacks. For that, OWASP is an essential project to know, study and use.

Hope it helps.