Photo by Hugo Jehanne on Unsplash

In this third article about Application Insights, we will review how to create custom alerts triggered by the telemetry our applications emit. Creating alerts on Azure is simple, cheap and fast, and it adds a lot of value to our operations.

Azure Alerts

But what are Azure Alerts? According to Microsoft:

> Azure Alerts proactively notify you when important conditions are found in your monitoring data. They allow you to identify and address issues before the users of your system notice them.

With Azure Alerts we can create custom alerts based on metrics or logs, using data sources such as:

- Metric values

- Log search queries

- Activity log events

- Health of the underlying Azure platform

- Tests for website availability

- and more...

Customization

The level of customization is fantastic. Alert rules can be customized with:

- Target Resource: Defines the scope and signals available for alerting. A target can be any Azure resource, for example a virtual machine, a storage account or an Application Insights resource.

- Signal: Emitted by the target resource. Signals can be of the following types: metric, activity log, Application Insights and log.

- Criteria: A combination of signal and logic. For example, percentage CPU, server response time, result count of a query, etc.

- Alert Name: A specific name for the alert rule configured by the user.

- Alert Description: A description for the alert rule configured by the user.

- Severity: The severity of the alert when the rule is met. Severity ranges from 0 to 4, where 0 = Critical and 4 = Verbose.

- Action: A specific action taken when the alert is fired. For more information, see Action Groups.
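To make the list above concrete, here is a minimal Python sketch that models an alert rule as plain data. The `AlertRule` and `Severity` types and the `severity_label` helper are hypothetical and not part of any Azure SDK. The severity names follow Azure Monitor's Sev0 (Critical) to Sev4 (Verbose) scale.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import IntEnum


class Severity(IntEnum):
    """Azure Monitor alert severities; a lower number means more severe."""
    CRITICAL = 0
    ERROR = 1
    WARNING = 2
    INFORMATIONAL = 3
    VERBOSE = 4


@dataclass
class AlertRule:
    """Hypothetical in-memory model of the fields an alert rule is configured with."""
    target_resource: str  # scope, e.g. an Application Insights resource
    signal: str           # e.g. "Server response time"
    criteria: str         # signal + logic, e.g. "average > 1000 ms"
    name: str
    severity: Severity
    description: str = ""
    # Action groups (who gets notified); empty by default in this sketch
    action_groups: list[str] = field(default_factory=list)

    def severity_label(self) -> str:
        """Short label in the "Sev3" style used by the portal."""
        return f"Sev{int(self.severity)}"
```

For example, a Sev3 rule on response time could be written as `AlertRule("aspnet-ai", "Server response time", "average > 1000 ms", "slow-responses", Severity.INFORMATIONAL)`. The rule name here is made up. Using `IntEnum` keeps the numeric ordering, so `Severity.CRITICAL < Severity.VERBOSE` holds.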

Can I use Application Insights data and Azure Alerts?

Application Insights can be used as a source for your alerts. Once your app is hooked up to Application Insights, creating an alert is simple, as we'll see next.

Creating an Alert

Okay, so let's jump to the example. To understand how this works, we will:

- Create and configure an alert in Azure targeting our Application Insights instance;

- Change our application by adding a slow endpoint that we'll use in this exercise.

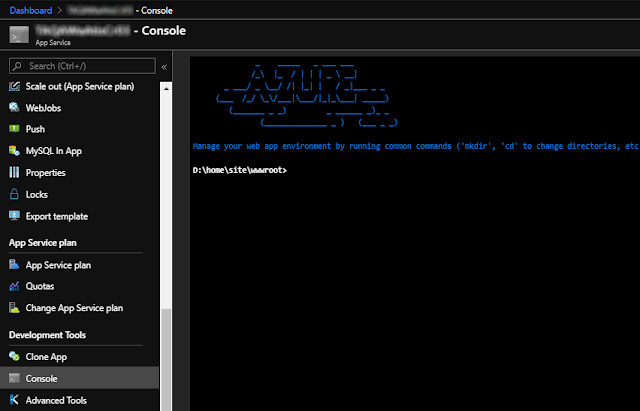

To start, go to the Azure Portal, find the Application Insights instance your app is hooked up to and click the Alerts icon under Monitoring. (Don't know how? Check this article, where it's covered in detail.)

Then click Manage alert rules and New alert rule to create a new rule.

Configuring our alert

You should now be on the Create rule page. Here we will specify a resource, a condition, who should be notified, notes and a severity. As before, I'll use the same aspnet-ai AppInsights instance.

Specifying a Condition

For this demo we will be tracking response time, so in the Configure section, select Server response time.

Setting Alert logic

On the Alert logic step we specify an operator and a threshold value. I want to track requests that take more than 1 s (1000 ms), evaluating every minute and aggregating data over a 5-minute window.

Action group

On the Add action group tab we specify who should be notified. For now, I'll send notifications only to myself.

Email, SMS and Voice

If you want to be alerted by email and/or SMS, enter your email address and phone number here. We'll need them to confirm that notifications are sent to our email and phone.

Confirming and Reviewing our Alert

After confirming, saving and deploying your alert, you should see a summary of the rule.

Testing the Alerts

With our alert deployed, let's write some code. If you want to follow along, download the source for this article and switch to the alerts branch:

```shell
cd aspnet-ai
git checkout alerts
# insert your AppInsights instrumentation key in appSettings.Development.json
dotnet run
```
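The alert only fires if the slow endpoint actually receives requests, so you'll need to call it a few times. The following is a minimal Python sketch of a load generator that calls a URL repeatedly and records each status code and duration. The `generate_load` helper is hypothetical. The URL is an assumption based on the SlowPage name used later in this post, so use the address `dotnet run` prints and the actual route from the alerts branch.

```python
import time
import urllib.error
import urllib.request


def generate_load(url: str, requests: int = 10, timeout: float = 30.0) -> list:
    """Call `url` `requests` times; return a list of (HTTP status, elapsed ms) tuples.

    Error responses (e.g. a 500 from an endpoint that throws) are recorded rather
    than raised, because those failed requests are still telemetry we want tracked.
    Connection errors (app not running) are not caught and will propagate.
    """
    results = []
    for _ in range(requests):
        started = time.perf_counter()
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                status = response.status
        except urllib.error.HTTPError as err:
            status = err.code  # non-2xx responses still carry a status code
        elapsed_ms = (time.perf_counter() - started) * 1000
        results.append((status, elapsed_ms))
    return results
```

For example, `generate_load("http://localhost:5000/SlowPage", requests=5)` returns five `(status, elapsed_ms)` pairs. An endpoint that sleeps and then throws would show up as 500s taking more than the 1000 ms threshold.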

Reviewing the Exception in AppInsights

It may take up to 5 minutes to get the notifications. In the meantime, we can explore how AppInsights tracked our exception by going to the Exceptions tab, which shows what was captured for us by default. Clicking the SlowPage link shows details about the error.

So let's quickly discuss this information. I added an exception to that endpoint because I also wanted to highlight that, without a single extra line of code, the exception was automatically tracked for us. And look how much information we get for free! That's another reason to use these resources proactively as much as possible.
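Why the wait? The rule we configured averages server response time over a 5-minute window, re-evaluates every minute and fires when the average exceeds 1000 ms. The sketch below is a simplified model of that logic. It is not Azure Monitor's implementation: the function names are hypothetical, time is bucketed into whole minutes, and notification delivery latency is ignored.

```python
from __future__ import annotations

from statistics import mean
from typing import Optional

# Values from the rule configured in this post
THRESHOLD_MS = 1000   # fire when the average exceeds 1000 ms
WINDOW_MIN = 5        # aggregate over the last 5 minutes
FREQUENCY_MIN = 1     # re-evaluate every minute


def breaches(samples: list[tuple[int, float]], now: int) -> bool:
    """True if the average response time in the window (now - WINDOW_MIN, now] exceeds the threshold.

    `samples` holds (minute, response_time_ms) pairs, one per request.
    """
    window = [ms for minute, ms in samples if now - WINDOW_MIN < minute <= now]
    return bool(window) and mean(window) > THRESHOLD_MS


def first_firing_minute(samples: list[tuple[int, float]], start: int, end: int) -> Optional[int]:
    """Evaluate every FREQUENCY_MIN minutes from `start` to `end`; return the first minute the rule fires."""
    for now in range(start, end + 1, FREQUENCY_MIN):
        if breaches(samples, now):
            return now
    return None
```

Under this model, one 3-second request among 200 ms requests never fires the rule because the windowed average stays around 667 ms. Sustained 2-second responses starting at minute 5 fire it at minute 7. Averaging smooths out one-off spikes, so a rule aimed at isolated slow requests would need a different aggregation or a lower threshold.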

Getting the Alert

Okay, but where's our alert? If you remember, we configured our alert to aggregate data over 5-minute windows. That's fine for Sev3 alerts but probably too slow for Sev1. So, after those long 5 minutes, you should get an alert describing the failure.

Reviewing Alerts

After the first alert is sent, you'll have a consolidated view under your AppInsights instance where you can review previously sent alerts grouped by severity. You can even click on them to get information and telemetry related to each event. Beautiful!

Types of Signals

Before we end, I'd like to show some of the signals that are available for alerts. You can use any of those (plus custom metrics) to create an alert for your cloud service.

Conclusion

Application Insights is an excellent tool to monitor, inspect, profile and alert on failures of your cloud resources. And given that it's extremely customizable, it's a must if you're running services on Azure.

More about AppInsights

Want to know more about Application Insights? Consider reading the following articles:

- Adding Application Insights telemetry to your ASP.NET Core website

- How to suppress Application Insights telemetry

- How to profile ASP.NET apps using Application Insights

