Running CentOS on Azure is great. However, getting there requires some work because none of the CentOS images available on Azure at the moment are free. In this post we will continue illustrating why one should use CentOS by deploying our own CentOS Stream VM to Azure.

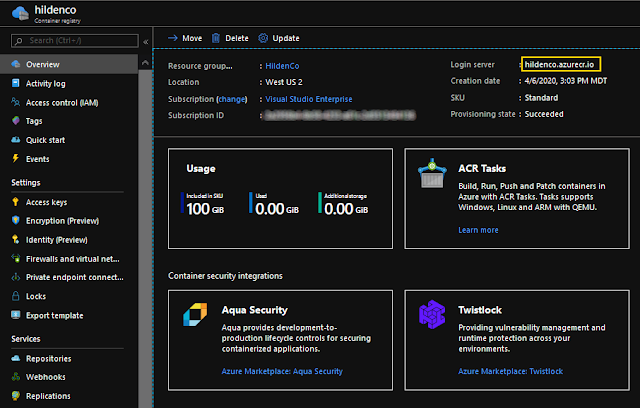

Azure Requirements

Before getting our hands dirty, let's review the requirements to run a custom VM on Azure:

- Disk format: at the moment, only fixed VHD is supported;
- Gen: Azure supports Gen1 (BIOS boot) and Gen2 (UEFI boot) virtual machines. Gen1 worked better for me;
- Disk space: minimum 7 GB of disk space;
- Partitioning: use default partitions instead of LVM or RAID;
- Swap: swap should be disabled as Azure does not support a swap partition on the OS disk;
- Virtual size: all VHDs on Azure must have a virtual size aligned to 1 MB;
- Supported formats: XFS is now the default file system but ext4 is still supported.
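The 1 MB alignment rule is easy to check before uploading anything. The sketch below is a hypothetical helper (the function name is mine), and it assumes a fixed VHD is the raw disk data followed by a 512-byte footer, so the virtual size is the file size minus 512 bytes:

```shell
# Sketch: check whether a fixed VHD's virtual size is aligned to 1 MiB.
# Assumption: file size = virtual size + 512-byte VHD footer.
MIB=$((1024 * 1024))
VHD_FOOTER_BYTES=512

# Prints "aligned" or "not aligned" for a VHD file size given in bytes.
check_vhd_alignment() {
    file_bytes=$1
    virtual_bytes=$((file_bytes - VHD_FOOTER_BYTES))
    if [ "$virtual_bytes" -gt 0 ] && [ $((virtual_bytes % MIB)) -eq 0 ]; then
        echo "aligned"
    else
        echo "not aligned"
    fi
}

# Example (file name is a placeholder):
#   check_vhd_alignment "$(wc -c < centos.vhd)"
```

A disk created as fixed size with a whole number of gigabytes should pass this check; a failure usually means the disk was resized or converted along the way.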

Installing CentOS on Hyper-V

The first thing we have to do is produce a virtual hard disk (VHD) with a bootable CentOS installed using Hyper-V, as explained in detail in a previous post. Today we'll extend that setup, adding what's necessary to run it on Azure. In this tutorial we will:

- Download the CentOS 8 Stream ISO
- Create a virtual hard disk (VHD) in Hyper-V
- Create and configure the VM in Hyper-V
- Install CentOS on the VM by:
  - Specifying a software selection
  - Configuring networking (we'll need to install software after the first boot)
  - Configuring partitions on disk
  - Creating accounts
- Modify the system to add settings required by Azure

Downloading the CentOS 8 ISO

This should be obvious to most people. In order to get our custom CentOS installed on a VHD with Hyper-V, go ahead and download the latest ISO to your computer. We'll need that ISO to load the installer and install the system to our VHD. As before, we'll use CentOS Stream.

Creating a Virtual Hard Disk (VHD)

With the ISO downloaded, let's create a virtual hard disk (VHD) in Hyper-V. To do so, open Hyper-V Manager, click New -> Hard Disk and choose VHD on the Choose Disk Format screen:

Next, on Choose Disk Type, choose Fixed size:

In Configure Disk, set the disk size. In my tests, 6GB was a reasonable size for a simple server, with enough space on the home partition:

Creating the VM

The process to create the Hyper-V VM remains the same. Make sure to review the previous post for the details, as I'll only describe the essential bits that are required by the Azure customization here.

Configuring Networking

Make sure that you choose the Default Switch in Configure Networking:

Connecting the Virtual Hard Disk

On Connect Virtual Hard Disk, we'll choose Use an existing virtual hard disk and point it to the one we just created. This is necessary because Hyper-V auto-creates VHDXs by default while Azure requires VHDs:

To finish up, validate on Summary that all looks good and confirm:

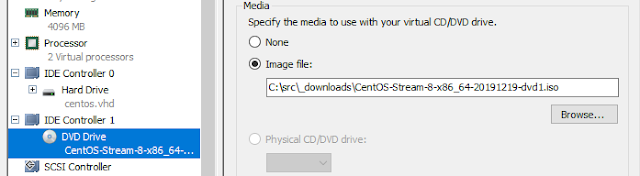

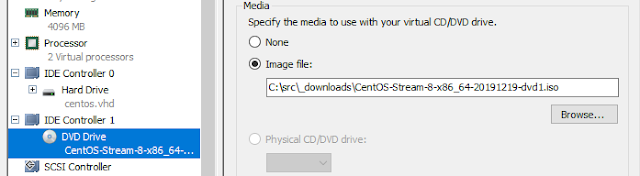

Specifying the ISO

The last thing before starting up the VM is to specify the ISO as a DVD drive. That's done in Hyper-V Manager by selecting DVD Drive -> Media, choosing Image file and locating yours on disk:

I also like to disable checkpoints and unset automatic start/stop actions.

Installing CentOS Stream

After starting the VM in Hyper-V, you should be prompted with the screen below. Choose Install CentOS Stream 8-stream:

The installer

After the boot ends, you should be running the installer, called Anaconda. Choose your language and click Continue:

Installation Summary

On Installation Summary, we'll essentially configure the software selection and the network. We'll also need to set up partitions on the Installation Destination screen.

Software selection

For the software selection, we'll go with Minimal Install as I don't want to delay the process by installing what we don't need. The only extra we'll need to install is a text editor (I'll be using Vim, my favourite text editor, but feel free to use yours) so we can make the necessary changes.

During installation, click on Software Selection and choose Minimal Install:

Disk Partitioning

Because Azure requires some special settings (see requirements above), we'll need to do manual partitioning. But don't be scared, it isn't complicated. We'll divide our disk into three main partitions:

- /boot, 300 MB - where the boot files will be placed (including the kernel)
- /, 4 GB - where all the files of the system will be placed (including software, logs, services and libraries)
- /home, 1 GB - to store user files
- no swap - we don't need a swap partition as Azure will provision one for us.

We'll also use XFS whenever applicable since it's the default in Azure now.

Choose your disk and click on Custom:

On the Manual Partitioning screen, click on Standard Partition:

Add the manual partitions by clicking on the + sign below. The first to add is /boot. Enter 300m on the popup so you see:

Add 1GB for /home:

And the remainder (4.7G) for /:

Confirm to complete:

Networking

Enable networking as we'll need to install our text editor (and, if you wish, update the instance before uploading to Azure):

Start Installation

Once all the settings are entered, click continue to proceed. During the install, you will be prompted with a screen similar to:

It's recommended to set a root password and to create an account. I also recommend checking the Make this user administrator option as we should be using root as little as possible:

Before the first boot

Once the installation finishes, eject the virtual ISO by going to Hyper-V Manager, choosing your VM -> Settings -> DVD Drive and setting it to None -> Apply:

First Boot

After ejecting the DVD and starting the VM, you should see the following boot loader:

Then the following login screen after the boot finishes:

Azure Configuration

We'll now proceed with our Azure configuration. For CentOS 8, the documentation is specified here (although in less detail than in this blog post). Log in as root and follow the next steps.

Testing the network

If you recall, we chose Minimal Install during the installation. That means that we don't have a text editor yet, so let's proceed with installing one as we'll need it to modify our configuration. To confirm our network can access the internet, type:

ping github.com

No network?

If no network is available, check the status of your network devices with:

nmcli device status

If eth0 is down, we should enable eth0 to automatically get an IP from our Hyper-V virtual switch with:

nmcli con up eth0

Try pinging again and it should work fine now:

ping github.com
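If you have more than one adapter, reading the status table by eye gets tedious. As a rough sketch, the hypothetical helper below reads the terse output of nmcli (one DEVICE:STATE pair per line, as produced by the -t and -f options) and prints only the devices that are neither connected nor unmanaged:

```shell
# Sketch: list devices that look "down" from terse nmcli output on stdin.
# Expected input format: one "DEVICE:STATE" pair per line.
list_down_devices() {
    while IFS=: read -r dev state; do
        case "$state" in
            connected*|unmanaged)
                # Device is up, or deliberately not handled by NetworkManager.
                ;;
            *)
                if [ -n "$dev" ]; then
                    echo "$dev"
                fi
                ;;
        esac
    done
}

# Usage:
#   nmcli -t -f DEVICE,STATE device status | list_down_devices
```

Anything it prints is a candidate for nmcli con up.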

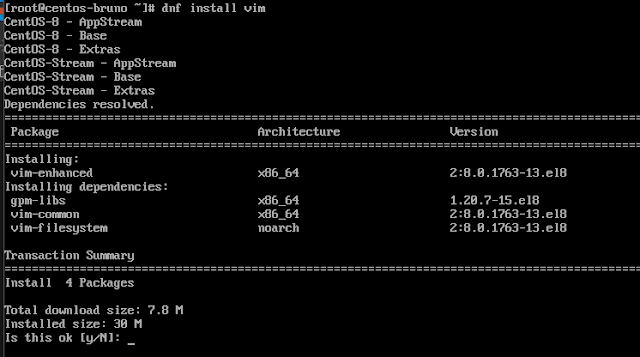

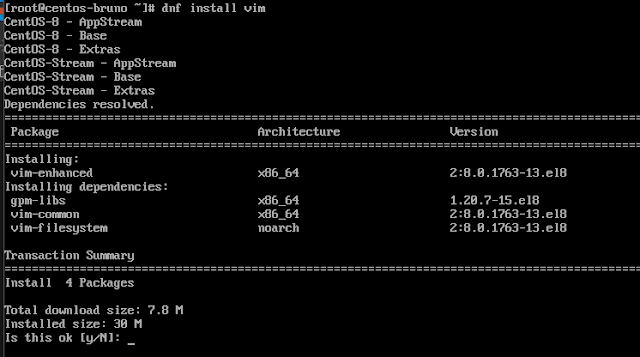

Installing Vim

For editing files I'll install Vim, my favourite text editor. That can be done with:

dnf install vim

Whenever possible, I'll be using DNF as it's what I'm used to as a Fedora user. Feel free to use Yum if you prefer it.

Configuring the network

To configure the network, the first step is to create or edit the file /etc/sysconfig/network and add the following:

NETWORKING=yes

HOSTNAME=localhost.localdomain

You can run this as a one-liner with:

printf "NETWORKING=yes\nHOSTNAME=localhost.localdomain\n" >> /etc/sysconfig/network
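One caveat with appending via >> is that running the command twice leaves duplicate lines in the file. A minimal sketch of an idempotent alternative, using a hypothetical ensure_line helper that only appends a line when that exact line is missing:

```shell
# Sketch: append a line to a file only if that exact line is not there yet.
ensure_line() {
    file=$1
    line=$2
    touch "$file"
    # -x matches whole lines only, -F treats the text as a fixed string.
    if ! grep -qxF -- "$line" "$file"; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Usage (as root):
#   ensure_line /etc/sysconfig/network "NETWORKING=yes"
#   ensure_line /etc/sysconfig/network "HOSTNAME=localhost.localdomain"
```

This makes it safe to rerun the preparation steps while experimenting with the image.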

Create or edit the file /etc/sysconfig/network-scripts/ifcfg-eth0 and add the following text:

DEVICE=eth0

ONBOOT=yes

BOOTPROTO=dhcp

TYPE=Ethernet

USERCTL=no

PEERDNS=yes

IPV6INIT=no

NM_CONTROLLED=no
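If you'd rather not type this in an editor, a here-document can write the same content in one go. The helper below is only a sketch: the function name is hypothetical, and the target directory is a parameter so you can try it on a scratch folder first.

```shell
# Sketch: write the ifcfg-eth0 content shown above into a given directory.
# Note: this overwrites any existing ifcfg-eth0 in that directory.
write_ifcfg_eth0() {
    target_dir=$1
    cat > "$target_dir/ifcfg-eth0" <<'EOF'
DEVICE=eth0
ONBOOT=yes
BOOTPROTO=dhcp
TYPE=Ethernet
USERCTL=no
PEERDNS=yes
IPV6INIT=no
NM_CONTROLLED=no
EOF
}

# Usage (as root):
#   write_ifcfg_eth0 /etc/sysconfig/network-scripts
```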

Modify udev rules to avoid generating static rules for the Ethernet interface(s). These rules can cause problems when cloning a virtual machine in Microsoft Azure or Hyper-V:

ln -s /dev/null /etc/udev/rules.d/75-persistent-net-generator.rules

Modifying GRUB

Next, we'll modify the kernel boot line in the GRUB configuration to include additional kernel parameters for Azure. To do this, open /etc/default/grub and set GRUB_CMDLINE_LINUX to:

GRUB_CMDLINE_LINUX="rootdelay=300 console=ttyS0 earlyprintk=ttyS0 net.ifnames=0"

Rebuild the grub configuration:

grub2-mkconfig -o /boot/grub2/grub.cfg
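The edit to /etc/default/grub can also be scripted. The sketch below uses a hypothetical set_grub_cmdline helper that replaces an existing GRUB_CMDLINE_LINUX line or appends one if it is missing. It assumes the parameter string contains no |, & or backslash characters, which holds for the values used in this post.

```shell
# Sketch: set GRUB_CMDLINE_LINUX in a grub defaults file.
# Assumption: $params contains no '|', '&' or '\' (they are special to sed).
set_grub_cmdline() {
    grub_file=$1
    params=$2
    if grep -q '^GRUB_CMDLINE_LINUX=' "$grub_file"; then
        # Rewrite the existing assignment, leaving every other line untouched.
        sed "s|^GRUB_CMDLINE_LINUX=.*|GRUB_CMDLINE_LINUX=\"$params\"|" \
            "$grub_file" > "$grub_file.tmp" && mv "$grub_file.tmp" "$grub_file"
    else
        printf 'GRUB_CMDLINE_LINUX="%s"\n' "$params" >> "$grub_file"
    fi
}

# Usage (as root, ideally after backing up the file):
#   set_grub_cmdline /etc/default/grub \
#       "rootdelay=300 console=ttyS0 earlyprintk=ttyS0 net.ifnames=0"
```

The GRUB configuration still has to be rebuilt with grub2-mkconfig after the file changes.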

Installing the Azure Linux Agent

We'll now install the Azure Linux Agent. This package is required by Azure to perform essential tasks on our VM including provisioning, networking, user management, SSH, swap, diagnostics, etc. Installing it on CentOS is super simple as the package is available in the repo:

dnf install WALinuxAgent

Then modify /etc/waagent.conf, making sure you have:

ResourceDisk.Format=y

ResourceDisk.Filesystem=ext4

ResourceDisk.MountPoint=/mnt/resource

ResourceDisk.EnableSwap=y

ResourceDisk.SwapSizeMB=4096 ## setting swap to 4Gb
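It's easy to miss one of these settings in a long config file. As a sketch, the hypothetical helper below prints every expected KEY=VALUE pair that the file does not currently have, ignoring trailing comments like the one on the SwapSizeMB line:

```shell
# Sketch: report expected waagent.conf settings that are missing or different.
# Note: the key is used as a sed pattern, so '.' in a key matches any character.
missing_waagent_settings() {
    conf=$1
    shift
    for setting in "$@"; do
        key=${setting%%=*}
        value=${setting#*=}
        # Take the value up to the first space or '#'; the last match wins.
        actual=$(sed -n "s/^$key=\([^[:space:]#]*\).*/\1/p" "$conf" | tail -n 1)
        if [ "$actual" != "$value" ]; then
            echo "$setting"
        fi
    done
}

# Usage:
#   missing_waagent_settings /etc/waagent.conf \
#       ResourceDisk.Format=y ResourceDisk.Filesystem=ext4 \
#       ResourceDisk.MountPoint=/mnt/resource \
#       ResourceDisk.EnableSwap=y ResourceDisk.SwapSizeMB=4096
```

No output means all the listed settings are in place.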

To finish off, enable it on boot with:

systemctl enable waagent

Deprovisioning and powering off

Almost there. Just run the following commands to deprovision the virtual machine and prepare it for Azure:

waagent -force -deprovision

export HISTSIZE=0

systemctl poweroff

The machine will shut down. Let's move to the Azure part now.

Uploading virtual hard disk to Azure

Now that our setup is complete, we'll upload our VHD to Azure so we can create new virtual machines from it. There are two ways to do this:

- using AzCopy (only for the brave)
- using Azure Storage Explorer (recommended)

Unfortunately I can't recommend using AzCopy at the moment as the tool is full of bugs. It could be that Microsoft is still learning Go 😉.

Uploading using AzCopy (only for the brave)

To upload our VHD, you should install AzCopy and install the Azure CLI. After the installations finish, close and reopen all PowerShell/terminal windows so all the env vars are reloaded.

Log in to Azure using the CLI

Let's log in to the Azure CLI by typing in a PowerShell window:

az login

Create the disk

In order to create our managed disk, first we need to determine its actual size. To get your disk size, type the command below and copy the output as it will be needed for the upload:

wc -c <file.vhd>

Now, run a command similar to the one below, replacing the items in <> with your data:

az disk create -n <disk-name> -g <resourcegroup> -l <region> --for-upload --upload-size-bytes <your-vhd-size> --sku standard_lrs
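To avoid copying the byte count by hand, the size can be read from the file and dropped straight into the command. The helper below is a hypothetical dry-run sketch: it only prints the az command (using the same flags as above) so you can review it before running it yourself.

```shell
# Sketch: print (not run) the az disk create command for a given VHD file.
# All names passed in are placeholders chosen by the caller.
print_disk_create_cmd() {
    vhd=$1
    disk=$2
    group=$3
    region=$4
    # Size of the VHD file in bytes; tr strips padding some wc versions add.
    size=$(wc -c < "$vhd" | tr -d ' ')
    echo "az disk create -n $disk -g $group -l $region" \
         "--for-upload --upload-size-bytes $size --sku standard_lrs"
}

# Usage:
#   print_disk_create_cmd ./centos.vhd my-disk my-group westeurope
```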

To upload, first we'll need to generate a SAS token with:

az disk grant-access -n <disk-name> -g <resourcegroup> --access-level Write --duration-in-seconds 86400

If you got a JSON response with an "accessSas" token in it, copy that URL. We'll use it to upload our VHD file to Azure using the azcopy tool:

azcopy copy "<path-to-vhd>" "<sas-token>" --blob-type PageBlob
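Copying the SAS URL out of the JSON by hand is error-prone. As a sketch, the hypothetical helper below reads that JSON on stdin and prints the value of the "accessSas" field. It assumes the field name is exactly as described above and that the URL contains no escaped double quotes.

```shell
# Sketch: extract the "accessSas" value from JSON given on stdin.
extract_access_sas() {
    # Join the JSON into a single line, then capture the quoted value.
    tr -d '\n' |
        sed -n 's/.*"accessSas"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Usage (placeholders as in the commands above):
#   az disk grant-access -n <disk-name> -g <resourcegroup> \
#       --access-level Write --duration-in-seconds 86400 | extract_access_sas
```

If the helper prints nothing, check the raw JSON first, as the field may be named differently in your CLI version.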

After the upload is complete, and you no longer need to write any more data to the disk, revoke the SAS. Revoking the SAS will change the state of the managed disk and allow you to attach the disk to a VM.

az disk revoke-access -n <disk-name> -g <resourcegroup>

Option 2 (Recommended): Using Azure Storage Explorer

I usually don't recommend GUIs but AzCopy is unusable at the moment. Also, uploading via Azure Storage Explorer was way faster and didn't time out on me 😒.

So install Azure Storage Explorer, open a Blob container, find or create a folder and click Upload File. Select your VHD and don't forget to set it to Page Blob:

Once completed, you should see your VHD in your remote blob storage folder:

Right-click your disk -> Properties and copy the Uri:

Next, run the following command to create a managed disk from that VHD (it should be quick):

az disk create -n <disk-name> -g <resourcegroup> -l <region> --source <your-vhd-url>

At this point our disk should be recognized by Azure:

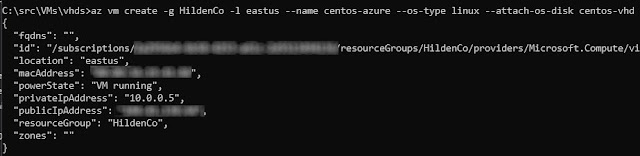

Creating the VM

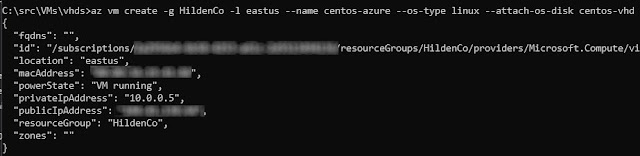

With the disk available, we're ready to create our VM with:

az vm create -g <resourcegroup> -l <region> --name <vmname> --os-type linux --attach-os-disk <disk-name>

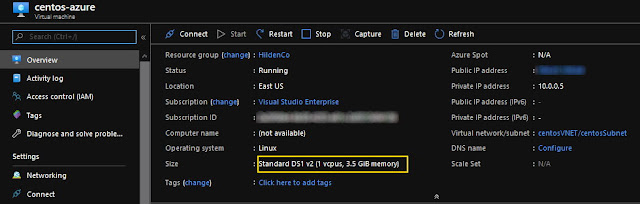

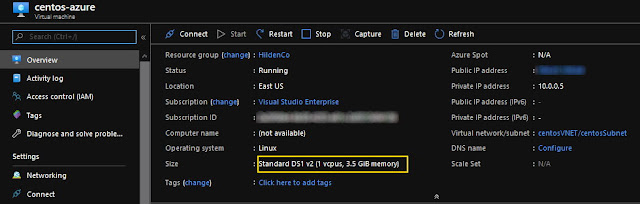

You should now see your VM on Azure as expected:

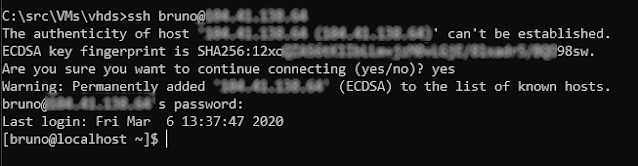

Testing the VM

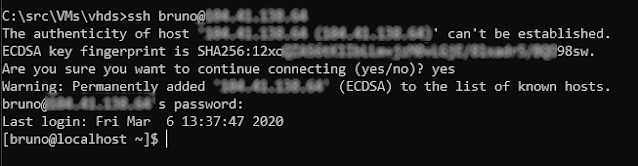

Now the fun part, let's see if this works. If you look carefully at the image above, you'll see our IP listed there. We can try to ssh into it with:

ssh bruno@<ip>

Yay! Our custom CentOS VM is available on Azure and we can access it remotely. From here, it's up to you to install the services you need. Or, just play a little with it, tear it down, recreate it and so on.

Security Considerations

I dream of the day we no longer have to discuss hardening our VMs on public cloud providers. Unfortunately we're not there yet. There are a lot of bots scanning for open ports and vulnerabilities on public IPs so make sure you take the necessary precautions to secure your SSH service, such as preventing root login, changing the SSH port number and even banning failed login attempts.
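The first two precautions come down to a couple of lines in the SSH daemon config. The sketch below uses a hypothetical set_sshd_option helper that replaces an existing (possibly commented-out) option line or appends a new one. The file path is a parameter so it can be tried on a copy first, and the port number in the usage example is just an illustration.

```shell
# Sketch: set "Key value" in an sshd_config-style file.
# Assumption: key and value contain no '|', '&' or '\' (special to sed).
set_sshd_option() {
    conf=$1
    key=$2
    value=$3
    # Match the option whether it is active or commented out (e.g. "#Port 22").
    if grep -Eq "^[#[:space:]]*$key[[:space:]]" "$conf"; then
        sed "s|^[#[:space:]]*$key[[:space:]].*|$key $value|" \
            "$conf" > "$conf.tmp" && mv "$conf.tmp" "$conf"
    else
        printf '%s %s\n' "$key" "$value" >> "$conf"
    fi
}

# Usage (as root, on a backed-up file), then restart sshd:
#   set_sshd_option /etc/ssh/sshd_config PermitRootLogin no
#   set_sshd_option /etc/ssh/sshd_config Port 2222
```

Keep in mind that a non-default port also has to be allowed by SELinux, the local firewall and Azure's networking rules, otherwise you will lock yourself out.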

There are also other measures that we can take on Azure to block that traffic but that's beyond the scope of this post.

Troubleshooting

Before wrapping up, I'd like to leave a few tips. It's important to remember that a lot can go wrong here. So far we created a virtual machine on Hyper-V locally, modified the configuration, uploaded our VHD to blob storage and ran some commands using the Azure CLI to create our resources on Azure. What else could go wrong? Let's see next.

What's my public ip?

You can get that information on the overview or, from the shell with:

curl ipinfo.me

Can't access the VM via SSH

I had this error too. There are two possible problems: (1) your VM is not accessing the internet or (2) either a firewall or Azure's Networking is not properly set. Both should be fine if it's your first time accessing your VM.

The VM isn't starting up

Recheck the steps above. One option is to run the VM locally in Hyper-V and make sure you didn't break it while applying the configuration.

Can't access the internet

This error may happen if your VM is incorrectly configured or if it couldn't get an IP from Azure's DHCP server. Try accessing it from the Serial Console to get insights about the eth0 ethernet adapter, IP and connection status with:

ip a                      # check my adapters. Expected: eth0

nmcli device show eth0    # shows the status of the ethernet connection

nmcli con up eth0         # starts the connection

The VM won't get an IP

This is probably Azure's fault as the IP should be automatically given to you by their DHCP servers. Anyhow, we can retry being assigned an IP with:

sudo dhclient

waagent is not working

Using the Serial Console, check whether the agent is working and its status is active (running) with the first command below. If it isn't, start it with the second:

sudo systemctl status waagent

sudo systemctl start waagent

Can't connect to my VM via SSH

This could happen if your instance can't access the internet or if the SSH service is not running. Try connecting to it via the Azure Serial Console and check the previous steps to make sure that the VM can ping an external site.

Also confirm that your public IP is correct. If you did not specify a static one, Azure will release your previous IPs and there is no guarantee that a new one will be the same.

Virtual routing problems

If you think that the problem is related to virtual network routing in Azure, please check these links:

Conclusion

In this post we reviewed in detail how to create a custom CentOS Stream image and run it on Azure. For this demo we used CentOS, my favorite distro for the server, but most of the information described here should also be useful for other distributions. We also demoed how to use the Azure CLI and showed some of the features Azure provides to us.
