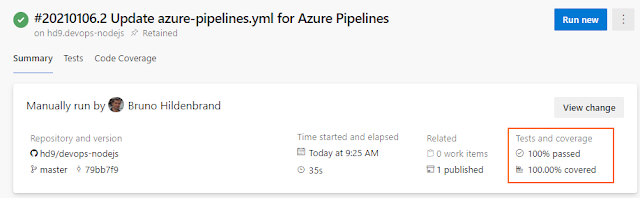

Azure Chaos Studio lets you stress test your applications directly from Azure, and can significantly strengthen your business continuity and disaster recovery (BCDR) strategy.

Source: Azure

Azure Chaos Studio is a new service in Azure that allows you to test and improve the reliability of your applications. With it, teams can quickly identify weak spots in their architecture, addressing the enterprise goals of business continuity and disaster recovery (BCDR).

Subjecting applications to real or simulated faults lets you observe how they respond to real-world disruptions.

Running chaos experiments used to be a complex task that required deploying equally complex workloads. Chaos Studio makes it simpler, because experiments can be run directly from the Azure portal.

Source: Azure

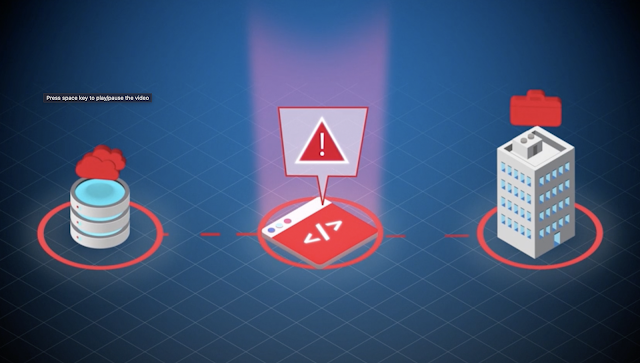

What is Chaos Engineering?

Chaos engineering is a practice that helps teams measure, understand, and improve their cloud applications by subjecting those applications to failures in controlled experiments. This practice helps identify weak spots in your architecture which, once fixed, increase your service's resilience.
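To make the idea of a controlled experiment concrete, here is a minimal, generic Python sketch. It does not use Azure Chaos Studio or any Azure API. The names `FaultInjector`, `call_with_retry`, and `run_experiment` are hypothetical and exist only for this illustration. The sketch injects a simulated fault into a fraction of calls to a dependency, then measures how many requests still succeed with and without retries.

```python
import random


class FaultInjector:
    """Wraps a callable and makes a fraction of calls fail (a simulated fault)."""

    def __init__(self, func, failure_rate, seed=0):
        self.func = func
        self.failure_rate = failure_rate
        self.rng = random.Random(seed)  # seeded so the experiment is repeatable

    def __call__(self, *args, **kwargs):
        # Fail with probability `failure_rate`, otherwise call the real function.
        if self.rng.random() < self.failure_rate:
            raise ConnectionError("injected fault")
        return self.func(*args, **kwargs)


def call_with_retry(func, attempts):
    """Call func up to `attempts` times; return True on success, False if all fail."""
    for _ in range(attempts):
        try:
            func()
            return True
        except ConnectionError:
            continue
    return False


def run_experiment(failure_rate, attempts, requests=1000):
    """Return the fraction of requests that succeed under the injected fault."""
    dependency = FaultInjector(lambda: "ok", failure_rate)
    successes = sum(call_with_retry(dependency, attempts) for _ in range(requests))
    return successes / requests


if __name__ == "__main__":
    # Compare a client without retries against one that retries up to 3 times.
    for attempts in (1, 3):
        rate = run_experiment(failure_rate=0.3, attempts=attempts)
        print(f"attempts={attempts}: success rate {rate:.1%}")
```

Comparing the two runs shows the kind of weak spot an experiment can surface: a client without retries passes every injected fault on to the caller, while a client with retries absorbs most of them.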

Why Chaos Engineering?

The problem that Chaos Studio tries to solve is not new. Organizations usually take disaster recovery and business continuity very seriously, because outages can significantly damage reputation, revenue, and much more.

For that reason, practicing chaos engineering is a must for organizations actively working on a business continuity and disaster recovery (BCDR) strategy. These drills ensure that applications can recover quickly and preserve critical data during failures.

Another important factor to consider is high availability (HA). Chaos engineering helps validate application resilience against regional outages, network configuration errors, high load, and more.

Features

Some of the most interesting features provided by Azure Chaos Studio are:

- Test resilience against real-world incidents, like outages or high CPU utilization.

- Reproduce incidents to better understand the failure.

- Ensure that post-incident repairs prevent the incident from recurring.

- Prepare for a major event or season with "game day" load, scale, performance, and resilience validation.

- Run business continuity and disaster recovery (BCDR) drills to ensure that your application can recover quickly and preserve critical data in a disaster.

- Run high availability (HA) drills to test application resilience against region outages, network configuration errors, and high-stress events.

- Develop application performance benchmarks.

- Plan capacity needs for production environments.

- Run stress tests or load tests.

- Ensure that services migrated from an on-premises or other cloud environment remain resilient to known failures.

- Build confidence in services built on cloud-native architectures.

- Validate that live site tooling, observability data, and on-call processes still work in unexpected conditions.

How to get started

References
