What's included in the project

- Database management: Redis Commander, Adminer and Mongo Express

- Administrative interfaces: to manage services such as RabbitMQ, via the RabbitMQ Management Console.

- Monitoring: with Grafana, Prometheus and cAdvisor.

- Log indexing (experimental): with the ELK stack.

Disclaimer

When you create a sample microservice-based application, you need to deal with complexity and make tough choices. For the aspnet-microservices application, I deliberately chose to balance complexity and architecture by reducing the emphasis on design patterns and focusing on the development of the services themselves. The project was built to serve as an introduction and a starting point for those looking to work with Docker, Compose and microservices.

Microservices included in this project

So far, the project consists of the following services:

- Web: the frontend for our e-commerce application;

- Catalog: provides catalog information for the web store;

- Newsletter: accepts user emails and stores them in the newsletter database for future use;

- Order: provides order features for the web store;

- Account: provides account services (login, account creation, etc.) for the web store;

- Recommendation: provides simple recommendations based on previous purchases;

- Notification: sends email notifications upon certain events in the system;

- Payment: simulates a fake payment store;

- Shipping: simulates a fake shipping store.

Technologies Used

- ASP.NET Core: as the base of our microservices;

- Docker and Docker Compose: to build and run containers;

- MySQL: serving as a relational database for some microservices;

- MongoDB: serving as the catalog database for the Catalog microservice;

- Redis: serving as a distributed caching store for the Web microservice;

- RabbitMQ: serving as the queue/communication layer over which our services will communicate;

- MassTransit: the interface between our apps and RabbitMQ, supporting asynchronous communication between them;

- Dapper: lightweight ORM used to simplify interaction with the MySQL database;

- SendGrid: used to send emails from our Notification service, as described in a previous post;

- Vue.js and Axios: used to build the frontend of the Web microservice on a simple and powerful JavaScript framework.
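In the project, the services exchange messages through MassTransit on top of RabbitMQ. To make the two messaging styles concrete without either dependency, here is a minimal in-memory sketch in Python: publish fans an event out to every subscriber (Pub/Sub), while request awaits a single reply (request/response). InMemoryBus, OrderSubmitted and CheckStock are hypothetical names chosen for illustration; this is not MassTransit's API.

```python
import asyncio
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Awaitable, Callable

Handler = Callable[[Any], Awaitable[Any]]


@dataclass
class OrderSubmitted:
    """Hypothetical event, e.g. published by the Order service."""
    order_id: int


@dataclass
class CheckStock:
    """Hypothetical request, e.g. answered by the Catalog service."""
    product_id: int


class InMemoryBus:
    """Toy, single-process stand-in for a message broker (NOT MassTransit)."""

    def __init__(self) -> None:
        self._subscribers: dict[type, list[Handler]] = defaultdict(list)
        self._responders: dict[type, Handler] = {}

    def subscribe(self, message_type: type, handler: Handler) -> None:
        # Pub/Sub: any number of consumers may listen to the same event type.
        self._subscribers[message_type].append(handler)

    def respond_to(self, message_type: type, handler: Handler) -> None:
        # Request/response: one consumer answers a given request type.
        self._responders[message_type] = handler

    async def publish(self, message: Any) -> None:
        # Deliver the event to every subscriber concurrently; no reply expected.
        handlers = self._subscribers[type(message)]
        await asyncio.gather(*(handler(message) for handler in handlers))

    async def request(self, message: Any, timeout: float = 5.0) -> Any:
        # Send a request and await its single response, failing after `timeout`.
        handler = self._responders[type(message)]
        return await asyncio.wait_for(handler(message), timeout)


async def demo() -> list[str]:
    bus = InMemoryBus()
    log: list[str] = []

    async def send_email(event: OrderSubmitted) -> None:
        log.append(f"notification: email for order {event.order_id}")

    async def schedule_shipping(event: OrderSubmitted) -> None:
        log.append(f"shipping: schedule order {event.order_id}")

    async def check_stock(request: CheckStock) -> int:
        return 3 if request.product_id == 42 else 0

    bus.subscribe(OrderSubmitted, send_email)
    bus.subscribe(OrderSubmitted, schedule_shipping)
    bus.respond_to(CheckStock, check_stock)

    in_stock = await bus.request(CheckStock(product_id=42))
    log.append(f"catalog: {in_stock} in stock")
    await bus.publish(OrderSubmitted(order_id=1))
    return log


if __name__ == "__main__":
    for line in asyncio.run(demo()):
        print(line)
```

A real broker such as RabbitMQ adds what this toy lacks: durable queues, retries and delivery across processes and containers.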

Conventions and Design Considerations

- The Web microservice serves as the frontend for our e-commerce application and implements the API Gateway / BFF (Backend for Frontend) design patterns, routing requests from the user to other services on an internal Docker network;

- Web caches catalog data in a Redis data store; feel free to use Redis Commander to delete cached entries if you wish or need to.

- Each microservice has its own database, isolating its state from external services. MongoDB and MySQL were chosen as the main databases due to their popularity.

- All services were implemented as ASP.NET Core web apps exposing the /help and /ping endpoints, so they can be inspected and observed automatically by the running engine.

- No special logging infrastructure was added. Logs can be easily accessed via docker logs or indexed by a different application if you so desire.

- Microservices communicate between themselves via Pub/Sub and asynchronous request/response using MassTransit and RabbitMQ.

- The Notification microservice will eventually send emails. This project was tested with SendGrid, but other SMTP servers should work from inside or outside the containers.

- Monitoring is experimental and includes Grafana sourcing its data from a Prometheus backend.
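The routing side of the API Gateway / BFF convention boils down to mapping a public path onto a service reachable on the internal Docker network. The Web service does this in ASP.NET Core; the Python sketch below only illustrates the idea. The /api/... prefixes and the hostnames (catalog, order, account, recommendation) are hypothetical, so check src/docker-compose.yml for the actual service names.

```python
# Hypothetical route table: public path prefix -> internal Docker hostname.
# The real service names are defined in src/docker-compose.yml.
ROUTES = {
    "/api/catalog": "http://catalog",
    "/api/orders": "http://order",
    "/api/account": "http://account",
    "/api/recommendations": "http://recommendation",
}


def resolve_upstream(path: str) -> str:
    """Return the internal URL a gateway would forward `path` to."""
    # Try longer prefixes first so more specific routes take precedence.
    for prefix in sorted(ROUTES, key=len, reverse=True):
        # Match whole path segments only, never a partial word.
        if path == prefix or path.startswith(prefix + "/"):
            remainder = path[len(prefix):] or "/"
            return ROUTES[prefix] + remainder
    raise LookupError(f"no route for {path!r}")


if __name__ == "__main__":
    print(resolve_upstream("/api/catalog/products/1"))  # prints http://catalog/products/1
```

Matching on the prefix followed by "/" keeps a path such as /api/catalogue from being routed to the Catalog service by accident.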
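The Redis caching in the Web service corresponds to the cache-aside pattern: read from the cache, fall back to the source on a miss, then store the result with an expiry. A minimal Python sketch of that pattern, assuming an in-memory TtlCache standing in for Redis, a hypothetical catalog:product:{id} key format and a 300-second TTL; none of these values come from the project.

```python
import json
import time
from typing import Callable, Optional


class TtlCache:
    """In-memory stand-in for Redis: string values with an expiry time."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._data: dict[str, tuple[float, str]] = {}
        self._clock = clock

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._data[key]  # lazily evict expired entries
            return None
        return value

    def set(self, key: str, value: str, ttl_seconds: float) -> None:
        self._data[key] = (self._clock() + ttl_seconds, value)

    def delete(self, key: str) -> None:
        # Similar in effect to deleting a key by hand in Redis Commander.
        self._data.pop(key, None)


def get_product(product_id: int, cache: TtlCache,
                load_from_catalog: Callable[[int], dict],
                ttl_seconds: float = 300) -> dict:
    """Cache-aside: try the cache first, fall back to the Catalog service."""
    key = f"catalog:product:{product_id}"  # hypothetical key format
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)
    product = load_from_catalog(product_id)  # cache miss: ask the source
    cache.set(key, json.dumps(product), ttl_seconds)
    return product
```

Deleting a key, as you might in Redis Commander, simply forces the next request to reload that entry from the source.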

Technical Requirements

To run this project on your machine, please make sure you have installed:

- Docker Desktop (Mac, Windows) or Docker Engine (Linux)

- Docker Compose

- .NET SDK 3.1 or greater

- A git client

- A Linux shell or WSL
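Before cloning, a quick way to confirm the tools above are on your PATH is a short Python check like the one below. The executable names are assumptions: recent Docker releases ship Compose as the docker compose plugin rather than a standalone docker-compose binary, and the script does not verify that the .NET SDK is version 3.1 or later.

```python
import shutil
from typing import Callable, Optional

# Assumed executable name for each prerequisite. With Compose V2 the
# standalone "docker-compose" binary may be absent even though the
# "docker compose" plugin works, so treat that entry as a hint only.
REQUIRED_TOOLS = {
    "Docker": "docker",
    "Docker Compose": "docker-compose",
    ".NET SDK": "dotnet",
    "git": "git",
}


def missing_tools(which: Callable[[str], Optional[str]] = shutil.which) -> list[str]:
    """Return the prerequisites whose executable could not be found on PATH."""
    return [name for name, exe in REQUIRED_TOOLS.items() if which(exe) is None]


if __name__ == "__main__":
    missing = missing_tools()
    print("All prerequisites found" if not missing else f"Missing: {', '.join(missing)}")
```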

If you want to develop, extend or modify it, I'd suggest you also have:

- a valid GitHub account

- Visual Studio 2019

- (or) Visual Studio Code

Running the microservices

Initializing the project

Get your copy by cloning the project:

Next, open the solution src/AspNetContainers.sln with Visual Studio 2019. Since code is always the best documentation, the easiest way to understand the containers and their configurations is by reading the src/docker-compose.yml file.

Debugging with Visual Studio

Building and debugging with Visual Studio 2019 is straightforward. Simply open the AspNetMicroservices.sln solution from the src folder, then build and run the project in debug mode (F5).

Next, run the dependencies (Redis, MongoDB, RabbitMQ and MySQL) by issuing the following command from the src folder:

Running the services with Docker Compose

You can also query individual logs for services as usual with docker logs <svc-name>. For example:

    info: CatalogSvc.Startup[0]
          DB Settings: ConnStr: mongodb://catalog-db:27017, Db: catalog, Collection: products
    info: Microsoft.Hosting.Lifetime[0]
          Now listening on: http://[::]:80
    info: Microsoft.Hosting.Lifetime[0]
          Application started. Press Ctrl+C to shut down.
    info: Microsoft.Hosting.Lifetime[0]
          Hosting environment: Production
    info: Microsoft.Hosting.Lifetime[0]
          Content root path: /app
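Since no logging infrastructure is bundled, feeding these logs to another tool usually starts with parsing them. A hedged Python sketch, assuming the default ASP.NET Core console format shown above: a header such as info: Category[EventId] followed by one or more message lines. parse_console_logs and LogEntry are hypothetical names.

```python
import re
from dataclasses import dataclass

# Header line written by the default ASP.NET Core console formatter,
# e.g. "info: Microsoft.Hosting.Lifetime[0]".
HEADER = re.compile(
    r"^(?P<level>trce|dbug|info|warn|fail|crit): "
    r"(?P<category>[^\[]+)\[(?P<event_id>\d+)\]$"
)


@dataclass
class LogEntry:
    level: str
    category: str
    event_id: int
    message: str


def parse_console_logs(text: str) -> list[LogEntry]:
    """Group each header line with the message lines that follow it."""
    entries: list[LogEntry] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # skip blank lines between entries
        match = HEADER.match(line)
        if match:
            entries.append(LogEntry(match["level"], match["category"],
                                    int(match["event_id"]), ""))
        elif entries:
            # Continuation line: append it to the current entry's message.
            previous = entries[-1].message
            entries[-1].message = f"{previous}\n{line}" if previous else line
    return entries
```

You could feed it the text captured from docker logs <svc-name>, then ship each LogEntry to whatever indexer you prefer.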

Database Initialization

Dockerfiles

Docker Compose

- docker-compose.yml: this is the main Compose file. Running this file means you won't be able to access some of the services, as they won't be exposed.

- docker-compose.debug.yml: this is the file you should run if you want to debug the microservices from Visual Studio. This file only contains the dependencies (Redis, MySQL, RabbitMQ, Mongo + admin interfaces) you'll need to use when debugging.

Accessing our App

Our simple e-commerce website. Like most things, its beauty is in the details 😊.

Admin Interfaces

- Grafana: http://localhost:3000/

- MySQL Admin: http://localhost:8010/

- Mongo Express: http://localhost:8011/

- RabbitMQ dashboard: http://localhost:8012/

- Redis Commander: http://localhost:8013/

- The ELK Stack (Experimental): http://localhost:5601/app/kibana#/home

Monitoring

Experimental monitoring is available with Grafana, Prometheus and cAdvisor. Open Grafana at http://localhost:3000/, log in with admin | admin and select the Docker dashboard. You should see metrics for the services similar to:

Grafana capturing and emitting telemetry about our microservices.

Quick Reference

As a summary, the microservices are configured to run at:

- Web: http://localhost:8000

- Catalog: http://localhost:8001

- Newsletter: http://localhost:8002

- Order: http://localhost:8003

- Account: http://localhost:8004

- Recommendation: http://localhost:8005

- Notification: http://localhost:8006

- Payment: http://localhost:8007

- Shipping: http://localhost:8008
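Because every service exposes /ping, a small script can sweep the ports above and report which containers answer. A minimal Python sketch using only the standard library; it assumes any 2xx response from /ping means the service is healthy, since the response body isn't documented here.

```python
from typing import Callable
from urllib.request import urlopen

# Ports taken from the Quick Reference; /ping is exposed by every service.
SERVICES = {
    "Web": 8000, "Catalog": 8001, "Newsletter": 8002, "Order": 8003,
    "Account": 8004, "Recommendation": 8005, "Notification": 8006,
    "Payment": 8007, "Shipping": 8008,
}


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """True if GET `url` answers with a 2xx status within `timeout` seconds."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:
        # URLError, HTTPError (non-2xx) and socket timeouts are all OSError subclasses.
        return False


def check_services(probe: Callable[[str], bool] = http_ok,
                   host: str = "localhost") -> dict[str, bool]:
    """Call each service's /ping endpoint and report which ones responded."""
    return {name: probe(f"http://{host}:{port}/ping") for name, port in SERVICES.items()}


if __name__ == "__main__":
    for name, up in check_services().items():
        print(f"{name:<15} {'up' if up else 'DOWN'}")
```

Passing a custom probe makes the function easy to test, or to point at a different host.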

The management tools are available on:

- Grafana: http://localhost:3000/

- MySQL Admin: http://localhost:8010/

- Mongo Express: http://localhost:8011/

- RabbitMQ dashboard: http://localhost:8012/

- Redis Commander: http://localhost:8013/

- The ELK Stack (Experimental): http://localhost:5601/app/kibana#/home

- MySQL databases: use Adminer at http://localhost:8010/, enter the server name (e.g. order-db for the Order microservice) and use root | todo as username/password.

- MongoDB: use Mongo Express at http://localhost:8011/. No username/password is required.

Final Thoughts

Source Code

References

See Also

- My journey to 1 million articles read

- Deploying Docker images to Azure App Services

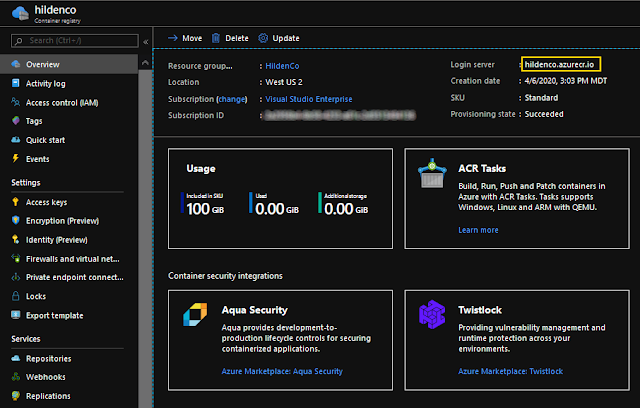

- Pushing Docker images to ACR - Azure Container Registry

- Distributed caching in ASP.NET Core using Redis, MongoDB and Docker

- Async Request/Response with MassTransit, RabbitMQ, Docker and .NET core

- Configuration in .NET Core console applications

- Adding Application Insights telemetry to your ASP.NET Core website

- Building and Hosting Docker images on GitHub with GitHub Actions

- Hosting Docker images on GitHub

- How to push Docker images to ACR - Azure Container Registry

- Send emails from ASP.NET Core websites using SendGrid and Azure

- Sam Newman: Monolith to Microservices

- github.com/dotnet-architecture/eShopOnContainers