Fedora Linux is a fantastic operating system. In this post I'd like to explore the reasons why I use and recommend Fedora as my daily driver, and present you with some of the reasons why I think it could be your next (and last) Linux distribution.

It's 2023 and there is endless praise for Fedora on YouTube. TL;DR: since this post was written (7 years ago), Fedora has become the most recommended Linux distro for users who want the best Linux experience. Hope you enjoy the article!

My personal journey

Everyone has their own Linux journey. In the beginning it's common for people to try out many distributions until they settle on one they're comfortable with. In the end, it's all about trade-offs. So let me tell you mine and how I came to choose Fedora as my main Linux distribution.

I always used Linux... in dual-boot mode. My journey with it started two decades ago when I was introduced to Slackware Linux. What I loved in Slackware was the level of granularity with which I could tweak my system. But I soon realized that Slackware required a lot of hands-on work and quickly moved to Red Hat Linux (before RHEL), which provided a more streamlined experience with its package system based on yum/RPMs.

Since then I've tried (in this order): Mandriva, Fedora Core, openSUSE and, more recently, Ubuntu, Mint, elementary OS, Xubuntu, Lubuntu, Arch, Manjaro and Solus. Yes, call me a distrohopper. Then I decided to retry Fedora 24 and check how it had progressed. Eight years later, Fedora remains my primary desktop.

With all that said, let me describe some of the reasons I think you should

consider using Fedora on your desktop.

Why I chose Fedora

So let's explore some honest reasons to use Fedora.

Simplicity

Simplicity definitely comes first. Simplicity is one of the characteristics that I enjoy the most in Fedora and is the reason I often recommend it to new users. From the installation to the default UI, everything seems integrated, accessible, fast and intuitive to use. Plus, the default GNOME desktop is pretty solid, its animations are smooth and the performance is really good.

|

|

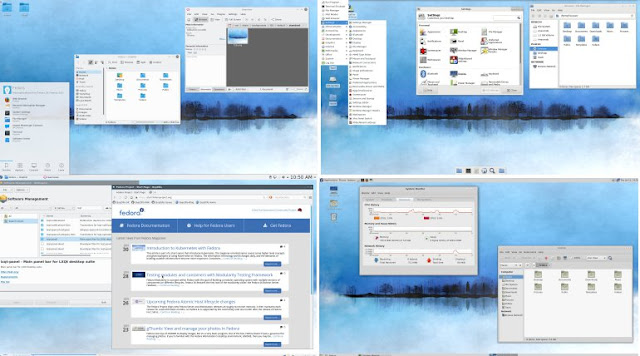

A Fedora workstation running Gnome 3.30 /

Source

|

|

Stability

Fedora is very stable. And contrary to what many people think, Fedora is not a beta-testing environment for RHEL. Packages are tested on its development channel, validated and, once stable with all dependencies met, released to millions of users. Rest assured that every update you get has been tested before it reaches you.

Security

Fedora shines on the security aspect. We know that online security is about more than one thing: keeping the system up to date is as important as having good anti-virus software, a working firewall and safe web browsing habits. Fedora comes with robust SELinux integration and, given its First principle, Fedora users get more up-to-date software and kernel updates than users of all other non-rolling release distros.

For those who don't know, SELinux was originally developed by the NSA and is the standard mandatory access control tool on Fedora, confining critical services and blocking suspicious behavior by default.
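If you're curious what SELinux is doing on your own machine, you can ask it for its current mode. The snippet below is only a minimal sketch: `getenforce` ships with the SELinux userland tools on Fedora, while the `selinux_mode` wrapper is a hypothetical helper of mine that falls back to `unavailable` on systems without those tools.

```shell
# selinux_mode is a hypothetical helper, not something Fedora ships.
# It prints the current SELinux mode (Enforcing, Permissive or Disabled),
# or "unavailable" when getenforce is missing or fails.
selinux_mode() {
    if command -v getenforce >/dev/null 2>&1; then
        getenforce 2>/dev/null || echo "unavailable"
    else
        echo "unavailable"
    fi
}

selinux_mode
```

On a default Fedora Workstation install I would expect this to report the enforcing mode, but that depends on how your system is configured.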

Privacy

Linux users shouldn't be concerned about privacy. Or should we? Fedora discloses its Privacy Policy publicly and adheres to it as it does to its core principles: Freedom, Friends, Features and First. Unlike some other distributions, Fedora never sent your desktop search results to e-commerce sites, nor does it contain intrusive telemetry that cannot be turned off.

Development-Friendly

Developers are pretty much covered with everything on the development site. Go, Rust, Docker, Swift, Python, Node.js, Ruby and Java, among others, can be found in the repos. Anything else? You will probably find it there.

Performance

Fedora performs. My 5-year-old laptop boots in less than 10 seconds. The performance is mainly due to more up-to-date software and to keeping the OS running with only the necessary resources. Yes, there are faster options and yes, GNOME is not as light as other desktops and window managers (although it got much better as of GNOME 3.36), but it's still the best choice for the average user.

Freedom

Fedora chooses free alternatives to proprietary code and content, and limits the effects of proprietary or patent-encumbered code on the project. Releases are predictable and include only 100% free software.

Community-Driven

Unlike other distros that are subject to private interests, Fedora is driven by a community of dedicated and passionate enthusiasts. All communication is open to the public and everyone is invited to collaborate! The Fedora communities on the internet are pretty receptive and you'll always find someone willing to help you.

Reliability

The Fedora project serves as the base for RHEL and CentOS. So it needs to be mature, it needs to be stable. It's a very serious Linux desktop operating system used daily by millions of users and servers around the world. It needs to be reliable. Personally, I've had zero problems doing distribution upgrades over the last 5 years.

Cutting-Edge Software

Fedora is frequently mentioned as the distribution that explores the outer limits of what Linux can do. But that's no news to Fedora users, since First is one of the foundations adopted by the project. Fedora repos usually land features way earlier than Ubuntu and derivatives (elementary, Mint and Pop!_OS), which IMHO is as good as it gets without being a rolling release. And remember, you can always add different repos or install software through universal Linux packages like Flatpak.

Frequent Updates

Fedora updates are frequent. Sometimes I get software upgrades multiple times a week, covering security issues, performance, stability and even kernel updates. It's also common to get multiple kernel updates per release. That usually means running a more mature, stable and secure kernel. It also means better performance and better hardware support.

Impressive Software Selection

The default package repository contains all the software you will ever need.

Plus, in Fedora the software can be managed by the powerful DNF package manager. For example, you can query DNF for the number of packages (software) available from the repositories, as I did from my XFCE instance.
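If you want to reproduce that count yourself, here is a minimal sketch. It assumes that each line printed by `dnf repoquery --available` is one package build, so the result counts builds (including different architectures) rather than unique names. The `count_available_packages` function is a hypothetical helper, not a DNF command.

```shell
# count_available_packages is a hypothetical helper.
# It prints how many package builds the enabled repositories offer.
# Assumption: every line of `dnf repoquery --available` is one package.
count_available_packages() {
    if ! command -v dnf >/dev/null 2>&1; then
        echo "dnf not found; this sketch only works on DNF-based systems" >&2
        return 1
    fi
    # -q keeps progress and metadata messages out of the count.
    dnf -q repoquery --available | wc -l
}
```

Call `count_available_packages` from your terminal. The number you get depends on your Fedora release and on which repositories you have enabled.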

DNF also handles distribution updates which happen twice a year.

Custom Software Repositories

The amount of software available on the official Fedora repo is incredible. Probably everything that you need, you can find there. But if the package selection in the free/default repo doesn't cover you, you can still use RPM Fusion to install software that doesn't meet Fedora's requirements.

Not to mention Copr (Cool Other Package Repo), a Fedora project that makes building and managing third-party package repositories easy. Copr is hosted under the Fedora Infrastructure and allows developers to create repos so they can be shared with users.
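As a rough sketch of what enabling these extra repositories looks like, the helper below builds the download URL for RPM Fusion's "free" release package. The `rpmfusion_free_url` function is hypothetical, and the URL pattern is my assumption based on RPM Fusion's setup instructions, so please double-check it on their site. The Copr repository name in the comments is a placeholder too.

```shell
# rpmfusion_free_url is a hypothetical helper: given a Fedora release number,
# it prints the URL of the matching RPM Fusion "free" release package.
# Assumption: the URL pattern below matches RPM Fusion's current instructions.
rpmfusion_free_url() {
    fedora_version="$1"
    echo "https://mirrors.rpmfusion.org/free/fedora/rpmfusion-free-release-${fedora_version}.noarch.rpm"
}

# On a Fedora system you could then enable the repositories like this
# (rpm -E %fedora expands to your release number):
#   sudo dnf install "$(rpmfusion_free_url "$(rpm -E %fedora)")"
#   sudo dnf copr enable someuser/someproject   # placeholder Copr repo name
```

The `dnf copr` subcommand comes from the DNF plugins package, so you may need to install that first if it's missing on your system.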

Excellent Hardware Support

Fedora has excellent support for hardware. I don't have cutting-edge hardware, nor am I a gamer, so I cannot comment on that, but for most people both the Fedora installer and the kernel are very good at recognizing and activating the hardware. That's probably a good reason why most people stop distrohopping when they get to Fedora. Most of the issues (including lack of hardware support) disappear.

Incubator for new features

The Fedora community creates many of the technical features that have made

Linux powerful, flexible, and usable for a wide spectrum of millions of users,

administrators, and developers worldwide. In fact, this is part of the Mission and Foundations of the project:

The Fedora community prefers approaches that benefit the progress of free

software in the future over those that emphasize short term ease of use.

Some of the features developed

with Fedora include:

- The Linux Kernel - there are hundreds of kernel hackers worldwide using Fedora. The most famous of them is Linus Torvalds, the creator of Linux ("It just works").
- Wayland - a new display system replacing the venerable X.org.
- XWayland - an X server that runs as a Wayland client, so X11 applications keep working under Wayland.
- systemd - Linux's default init system, used by most of the modern and popular distros.
- GNOME - a free and open-source desktop environment for Unix-like operating systems.
- The GTK toolkit - a cross-platform widget toolkit for creating graphical user interfaces.
- PipeWire - a new audio and video subsystem.
- Flatpak - application packaging.
- The Cockpit project - manage your server in a web browser and perform system tasks with a mouse.
- Anaconda installer - a free and open-source system installer used in Fedora, RHEL, CentOS and other Linux distributions.
- Podman - a tool to create and maintain containers.
- Buildah - a tool to create and manage container images.
- Silverblue - a next-gen OS for the desktop.
- Fedora CoreOS - a next-gen cloud appliance.
- Modularity - a mechanism for making multiple versions of software available to your system.

Two upgrades per year

Because Fedora releases happen twice a year, you'll get big system updates

(including Gnome, GCC and base libs) twice a year. It's the best way to have

up to date software without the complexities and issues rolling release

distros have.

Upgrading is as simple as 2 clicks in the Software app or running the commands below in your terminal:

sudo dnf upgrade --refresh

sudo dnf install dnf-plugin-system-upgrade

sudo dnf system-upgrade download --releasever=XXX

sudo dnf system-upgrade reboot
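The `XXX` above stands for the release you want to upgrade to. If you simply want the release right after the one you're running, a small sketch like the following can work it out. It assumes that `/etc/os-release` contains a numeric `VERSION_ID`, as it does on Fedora, and that the next release is already published. The `next_releasever` function is a hypothetical helper of mine.

```shell
# next_releasever is a hypothetical helper: it reads VERSION_ID from an
# os-release file (default: /etc/os-release) and prints that number plus one.
# Assumption: VERSION_ID is a plain integer, as it is on Fedora.
next_releasever() {
    os_release_file="${1:-/etc/os-release}"
    # Source the file in a subshell so its variables do not leak out.
    (
        . "$os_release_file" || exit 1
        [ -n "${VERSION_ID:-}" ] || exit 1
        echo "$((VERSION_ID + 1))"
    )
}

# Example use on a Fedora system:
#   sudo dnf system-upgrade download --releasever="$(next_releasever)"
```

I'd still check the Fedora website to confirm which release is current before upgrading, since the helper only does arithmetic on your installed version.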

Universal Linux Packages

You know you can install software using the package repos. Another option is using Snap or Flatpak. Installation is as simple as opening Software, searching for the app and clicking Install.
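If you prefer the terminal, the same thing can be done with the `flatpak` command. The sketch below only prints the two commands involved so you can review them before running anything. The `flathub_install_plan` function is a hypothetical helper, `org.example.App` is a placeholder application ID, and I'm assuming the Flathub remote definition lives at the URL shown.

```shell
# flathub_install_plan is a hypothetical helper: it only prints the flatpak
# commands needed to install an app from Flathub, without running them.
# Assumption: the Flathub repo file is published at the URL below.
flathub_install_plan() {
    app_id="$1"
    echo "flatpak remote-add --if-not-exists flathub https://dl.flathub.org/repo/flathub.flatpakrepo"
    echo "flatpak install flathub ${app_id}"
}

# org.example.App is a placeholder application ID, not a real app.
flathub_install_plan org.example.App
```

Copy the printed lines into your terminal, with a real application ID, once you're happy with them.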

RHEL

Many people overlook this, but another strong reason to use Fedora is that it's RHEL's upstream. You'll be working with Yum/DNF, systemd and SELinux, and testing what will be available in the next RHEL, the leading Linux-based OS on servers. Invaluable knowledge to have.

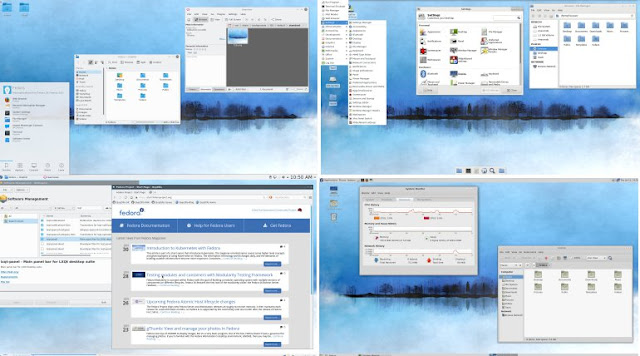

Variants

Don't like GNOME? Fedora also ships different spins including KDE, Xfce, LXQt, MATE, Cinnamon, LXDE and others. All available in both 64-bit and 32-bit versions. It even has support for ARM and IoT.

Fedora in some of its variants

On Labs, you can also find variants targeted at designers, astronomers, scientists and musicians.

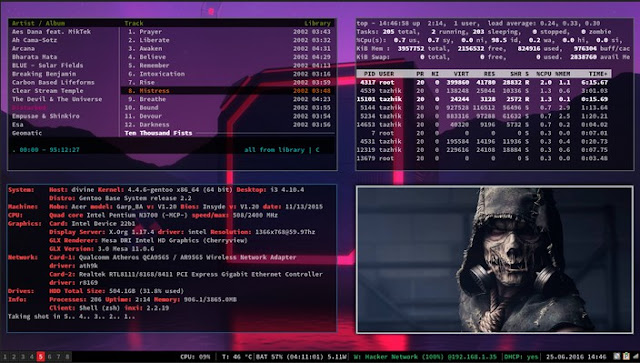

A multitude of options for your desktop

Don't like the default? You can always change it. For example, check this post to learn more about a multitude of desktops and window managers available on Fedora 31. Among my favorites:

- i3 (and its Wayland alternative Sway) - my personal choice ❤!
- XFCE - which I also use on my cloud instances, including my Ubuntu instance on Azure
- KDE - an excellent desktop for your Linux PC
- GNOME - I also have it installed at home as a fallback in case my i3 breaks (never happened 😊)
- And another multitude of options - 38 alternatives and counting to customize your desktop

Still on tiling window managers, for a couple of years now I've been using the i3 window manager (shown on the screenshot below) and I love it! For more information check my post on why i3 is awesome, how to install it and why it will change the way you use your Linux desktop.

So, Fedora is perfect?

Of course not! No software is perfect as no software will ever be bug-free.

However, it's important to consider that open-source operating systems are

extremely complex software bundles composed of thousands of packages

developed by volunteers worldwide.

Fedora provides the best balance in what matters most: open-source software,

up-to-date software and a strong focus on stability, security and privacy.

Plus, you can find everything you're looking for in Fedora and its derivatives. Need a more stable environment for your server or container? Go with Fedora Server or CentOS. Want to go even more cutting edge on the desktop? Try Silverblue. Need a lightweight alternative for that old netbook? Go with Fedora XFCE. Using ARM on a Pi or on a Pinebook Pro? Try Fedora ARM!

Fedora also shines on its community aspect. The community is very open to onboarding newcomers and volunteers. And as previously mentioned, everyone is invited to collaborate, even if it's just to write articles for the Fedora Magazine. All communication is shared online and the teams are structured to help newcomers through mentoring programs.

Conclusion

Fedora is a polished, modern, stable, secure and privacy-focused GNU/Linux operating system. With all its variants, I'm pretty sure there will be something for you too! If you have never used it, I would suggest that you try it, regardless of your technical background. If you use another distro, I would kindly recommend that you download Fedora, install it on a VM and give it a try. And don't hesitate to send a big thank you to the @Fedora community on Twitter.
