RavenDB Cloud may be an excellent choice for developers looking for a SaaS database in the cloud.

In this article we will learn:

- About RavenDB;

- About cloud integrations (AWS, Azure, Google Cloud);

- How to create an account;

- How to deploy your free AWS instance;

- How to manage your server;

- How to interact with it through code;

- Potential risks;

- What's next?

About RavenDB

I’m a big fan of RavenDB. I've been using it in production for the last 5 years, and the database has been fast, reliable and secure. Given its robust, friendly C# API and lots of interesting features, it was definitely a good choice for our product.

If you want to know more about my RavenDB experience, please click here.

RavenDB 4 introduces many welcome enhancements. Below I highlight my favourites:

- Management Studio – much faster, customizable and intuitive.

- Speed – Raven 4 is faster than 3.5. All the Raven 4 features I tested (database imports, queries, patches and custom implementations) showed significant improvements over the previous version.

- Security – authentication now happens via certificates instead of usernames/passwords, and the database offers encrypted storage and backups out of the box.

- New Server Dashboard – the new dashboard offers a holistic overview of the cluster, nodes and databases deployed on a cloud account.

- Clustering – Raven 4 is organized around clusters rather than single instances. This brings better performance, stability and consistency for applications.

- Auto-Backups – you can set up the database to run periodic backups (incremental or not). This feature is also available on RavenDB Cloud, helping to reduce the reliance on custom jobs and/or scripts. Backups can be encrypted and uploaded to different destinations, including blob storage on AWS and Azure.

- Ongoing Tasks – the new Manage Ongoing Tasks interface simplifies the configuration and deployment of important services such as ETL, SQL Replication and Backups.

- RQL – RQL is the new Raven Query Language, a mix of LINQ and JavaScript. It is much clearer and more intuitive than Lucene. See the Queries section for more information.

Licensing

Currently, three types of licenses are available: Free, Developer and Production. The main differences are:

- Only one free version per cloud account

- The free version runs only on AWS US East 1

- Some features (such as SQL replication) are only available on the Production version

If you would like to test RavenDB, remember that you can also run a local database.

Services

Which services are available in the free version? In summary, a free RavenDB Cloud license allows you to:

- Create a new RavenDB instance

- Manage the RavenDB server and databases

- Configure some aspects of the database (others are not available in the free version)

- Import an existing database

- Query data using a simple console tool

- Partially test the SQL replication feature

- Partially test the backup feature

AWS, Azure and Google Cloud Integration

RavenDB Cloud can also be deployed in the most popular AWS and Azure regions, and support for Google Cloud is in the works. That means the database can sit in the same datacenter as your application, reducing the latency between your services.

Pricing

The price varies by tier and usage time. This is the estimated pricing for the Development Tier (Dec 09, 2019):

Creating an Account

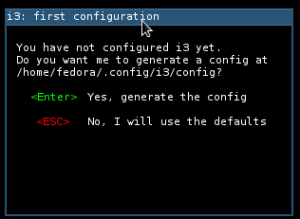

The process to create an account is simple. Just go to cloud.ravendb.net and click Get started for free:

Enter your email and proceed with the setup. You should receive an access token to log in to your new account.

Creating your Instance

To create your instance, log in to your account using the email token and click Add Product:

Specify the required information:

Then review your request and proceed with the deployment:

Deployment

Once the information above is submitted, the deployment process starts. It took around 2 minutes for my new cluster to be provisioned. Once deployed, you should see it in the Raven Cloud portal:

Accessing the Instance

To access the cluster, click the Manage button, which takes you to the new Management Studio (the same one shipped with RavenDB 4). New users will be required to install a certificate. Once logged in, here’s what the new RavenDB Studio looks like:

Some of the interesting features of the RavenDB Studio are:

- a nice overview of our databases on the right. From there you can quickly view failed indexes, alerts and errors.

- database telemetry, including CPU, memory, storage and indexing

- database management

- a global overview of the database cluster

- real-time cluster monitoring

- customizable

Creating Databases

Let's now create and import databases. Creating a new database is simple. Click Databases -> New Database:

Enter the database name and a few other properties, and the database is created quickly. Deploying a new database takes less than 5 seconds. An empty database uses approximately 60 MB on disk.

Costs

Unfortunately I can't provide a cost estimate, but remember that you should also consider costs for:

- networking: networking costs will vary and will likely increase your bill.

- storage: backup costs will also vary and will probably increase your costs.
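To make the point about extra costs concrete, here's a minimal Python sketch that adds compute, networking and backup storage into one monthly figure. Every rate in it is a hypothetical placeholder, not a RavenDB Cloud price. Billing the instance per hour is my assumption, based on pricing varying with usage time. Plug in the numbers from the pricing page and from your cloud provider.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    # Every rate here is a hypothetical placeholder, not a RavenDB Cloud price.
    instance_hourly_rate: float  # price per hour for the chosen tier (assumed hourly billing)
    hours_running: float         # hours the instance ran during the month
    egress_gb: float             # networking: GB transferred out of the region
    egress_rate_per_gb: float    # networking price per GB
    backup_gb: float             # average backup storage used over the month
    backup_rate_per_gb: float    # storage price per GB-month


def estimate_monthly_cost(c: CostInputs) -> float:
    """Rough monthly total: compute + networking + backup storage."""
    compute = c.instance_hourly_rate * c.hours_running
    networking = c.egress_gb * c.egress_rate_per_gb
    storage = c.backup_gb * c.backup_rate_per_gb
    return round(compute + networking + storage, 2)


# Example with made-up numbers: ~730 hours is one month of continuous uptime.
example = CostInputs(0.10, 730, 50, 0.09, 20, 0.05)
print(estimate_monthly_cost(example))  # 78.5
```

Even with placeholder rates, splitting the bill this way shows which line item grows fastest as traffic and backup size increase.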

Replication

Replication is configured directly in the Raven Studio, either when the database is created or later in its settings. The database administrator sets how many cluster nodes to use and chooses between dynamic and manual replication, and Raven handles the rest. This is an important feature, as it keeps your database up if one node in your cluster fails.

Importing Databases

I also tested the database import and, more importantly, whether it would be easy to migrate data from Raven 3.5 to Raven 4. Luckily, the import process didn’t change much, and a new RavenDB 4 database accepts most of the imported data successfully. This is what the import process looks like:

For the record, my cloud instance imported 1.5 million records in just over 2 minutes and 40 seconds:
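For a rough sense of throughput, the arithmetic is simple. The import took slightly more than 2:40, so the figure below is an upper bound rather than a measured rate:

```python
records = 1_500_000
seconds = 2 * 60 + 40  # "just over 2:40", so the real duration was slightly longer

rate = records / seconds  # records per second (an upper bound)
print(f"{rate:,.0f} records/second")  # 9,375 records/second
```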

Managing your Server

From the Manage your Server section you'll have access to tools such as cluster, client configuration, logs, certificates, backups, traffic, storage, queries and more. You can see below what's available to manage your cluster:

Database Tools

Under Manage Ongoing Tasks you will also find interesting database-specific resources:

Backups

Backups are handled directly from the Management Portal, via Database -> Settings -> Manage Ongoing Tasks:

I was pleasantly surprised that they offer backups to Amazon S3 buckets and Azure Blob Storage out of the box:

Once set up, you'll see that the automatic backup runs periodically:

Backup Considerations

Other important considerations regarding backups are:

- The Free and Production tiers are regularly and automatically backed up.

- You can define your own custom backup tasks, as you would with an on-premises RavenDB server.

- A mandatory backup task stores a full backup every 24 hours

- An incremental backup happens every 30 minutes

- Backups created by the mandatory backup routine are stored in RavenDB Cloud

- You will have no direct access to backups

- You can view and restore them using your portal's Backups tab and the Management Studio.

- Mandatory-backup files are kept in RavenDB's own cloud.

- RavenDB offers 1 GB of backup storage per product per month for free

- The backup storage usage is measured once a day, and you'll be charged each month based on your average daily usage.
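The mandatory routine and the billing rule above can be modeled in a few lines of Python. This is a sketch of my reading of the documentation, not RavenDB code. I assume the full backup and the 30-minute incrementals share the same starting point. I also assume the 1 GB free allowance is subtracted from the average daily usage before billing.

```python
from datetime import datetime, timedelta

FULL_EVERY = timedelta(hours=24)           # mandatory full backup
INCREMENTAL_EVERY = timedelta(minutes=30)  # mandatory incremental backup
FREE_GB_PER_PRODUCT = 1.0                  # free backup storage per product per month


def backup_events(start, end):
    """Return (time, kind) pairs for the mandatory backup routine in [start, end).

    Assumption: a full backup runs at `start` and every 24 hours after it, and
    the 30-minute slot that coincides with a full backup is not also counted
    as an incremental one.
    """
    events = []
    t = start
    while t < end:
        is_full = (t - start) % FULL_EVERY == timedelta(0)
        events.append((t, "full" if is_full else "incremental"))
        t += INCREMENTAL_EVERY
    return events


def billable_backup_gb(daily_usage_gb):
    """Average of the once-a-day measurements, minus the free allowance (assumed rule)."""
    average = sum(daily_usage_gb) / len(daily_usage_gb)
    return max(0.0, average - FREE_GB_PER_PRODUCT)


day = backup_events(datetime(2019, 12, 9), datetime(2019, 12, 10))
print(sum(1 for _, kind in day if kind == "full"), "full,",
      sum(1 for _, kind in day if kind == "incremental"), "incremental")
# 1 full, 47 incremental
print(billable_backup_gb([2.0] * 15 + [4.0] * 15))  # 2.0
```

In other words, a month where usage averages 3 GB a day would, under this reading, be billed for about 2 GB.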

External Replication

We can also configure external replication by selecting a database, then Settings / Manage Ongoing Tasks / Add Task / External Replication. The screenshot below shows the options:

SQL Replication

Raven Cloud also supports SQL replication. Unfortunately, this feature is not available in the free version. From what I could test, the feature didn’t change much from Raven 3.5 and runs well. The next step is to write a transformation script. For example:

Scheduled Backups

Scheduled backups can also be customized and are available under Database / Settings / Manage Ongoing Tasks / Add Task:

Scaling

Because it is clustered by default, RavenDB 4 can easily be scaled via the Portal. The documentation describes in detail how to configure it. Below is a screenshot provided by RavenDB showing how it should work (the feature isn't available on the free tier):

Security

RavenDB Cloud offers strong security features, including:

- Authentication: RavenDB uses X.509 certificate-based authentication. All access happens via certificates, and all instances are secured with HTTPS / TLS 1.2 / X.509 certificates.

- IP restriction: you can restrict which IP addresses can contact your server.

- Database Encryption: implemented at the storage level, with XChaCha20-Poly1305 authenticated encryption using 256 bit keys.

- Encryption at Rest: the raw data is encrypted and unreadable without possession of the secret key.

- Encrypted Backups: your mandatory backup routines produce encrypted backup files.

For more information, please read RavenDB on the Cloud: Security.
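IP restriction is essentially an allow-list check. RavenDB enforces it on the server side, and the Python sketch below only illustrates the idea; it is not how RavenDB implements it. It assumes the allowed addresses are expressed as CIDR ranges. The ranges in the example are reserved documentation addresses, not real ones.

```python
import ipaddress
from typing import Iterable


def is_allowed(client_ip: str, allowed_ranges: Iterable[str]) -> bool:
    """True if client_ip falls inside any of the allowed CIDR ranges."""
    ip = ipaddress.ip_address(client_ip)
    # strict=False accepts ranges written with host bits set, e.g. 10.0.0.5/24
    return any(ip in ipaddress.ip_network(r, strict=False) for r in allowed_ranges)


# Hypothetical allow-list: an office range plus a single build server.
ALLOWED = ["203.0.113.0/24", "198.51.100.7/32"]
print(is_allowed("203.0.113.42", ALLOWED))  # True
print(is_allowed("198.51.100.8", ALLOWED))  # False
```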

Cluster Api

There's also a Cluster API for managing the cluster. It allows your DevOps team to script cluster operations, including:

- Add nodes to the cluster

- Delete nodes from the cluster

- Promote a watcher node

- Demote a watcher node

- Force elections

- Force timeout

- Bootstrap the cluster

For example, we can dynamically add a node to a cluster by running the following PowerShell script:

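```powershell
# Use TLS 1.2 for the HTTPS connection
[Net.ServicePointManager]::SecurityProtocol = [Net.SecurityProtocolType]::Tls12

# Load the client certificate used to authenticate against the cluster
$clientCert = Get-PfxCertificate -FilePath <path-to-pfx-cert>

# Add the new node, passing the certificate loaded above
Invoke-WebRequest -Method Put -URI "https://<server-url>/admin/cluster/node?url=<new-node-url>&tag=<new-node-tag>&watcher=<is-watcher>&assignedCores=<assigned-cores>" -Certificate $clientCert
```

Or with the equivalent in cURL:

```shell
# Quote the URL so the shell doesn't treat '&' as a command separator
curl -X PUT "https://<server-url>/admin/cluster/node?url=<new-node-url>&tag=<new-node-tag>&watcher=<is-watcher>&assignedCores=<assigned-cores>" --cert <path-to-pem-cert>
```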
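The same request can also be scripted in Python using only the standard library. This is a sketch under a few assumptions:

- The endpoint path and the `url`, `tag`, `watcher` and `assignedCores` parameters come from the commands above.
- Sending `watcher` as a lowercase `true`/`false` string is my assumption.
- The client certificate must be converted to PEM, since Python's `ssl` module cannot load a .pfx file directly.

The function names are hypothetical.

```python
import ssl
import urllib.parse
import urllib.request
from typing import Optional


def add_node_url(server_url: str, node_url: str, tag: str,
                 watcher: bool = False, assigned_cores: Optional[int] = None) -> str:
    """Build the Cluster API URL that adds node_url to the cluster."""
    params = {"url": node_url, "tag": tag, "watcher": str(watcher).lower()}
    if assigned_cores is not None:
        params["assignedCores"] = str(assigned_cores)
    # urlencode percent-encodes the node URL so it survives as a query value
    query = urllib.parse.urlencode(params)
    return f"{server_url.rstrip('/')}/admin/cluster/node?{query}"


def add_node(server_url: str, node_url: str, tag: str, cert_pem: str,
             key_pem: Optional[str] = None, **options) -> int:
    """Send the PUT request, authenticating with a PEM client certificate."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # HTTPS with TLS 1.2 or newer
    context.load_cert_chain(certfile=cert_pem, keyfile=key_pem)
    request = urllib.request.Request(
        add_node_url(server_url, node_url, tag, **options), method="PUT")
    with urllib.request.urlopen(request, context=context) as response:
        return response.status
```

For example, `add_node_url("https://a.example.com", "https://b.example.com", "B", watcher=True, assigned_cores=2)` returns `https://a.example.com/admin/cluster/node?url=https%3A%2F%2Fb.example.com&tag=B&watcher=true&assignedCores=2`. Building the URL in its own function keeps it testable without a live cluster.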

Database Api

Since we’re talking DevOps, it’s important to note that the Cluster API only manages the nodes in the cluster. By itself, that isn’t sufficient to spin up a new working environment, since databases, indexes and data would also be required.

In case you're interested, I created a RavenDb.Cloud spike tool to review database operations.

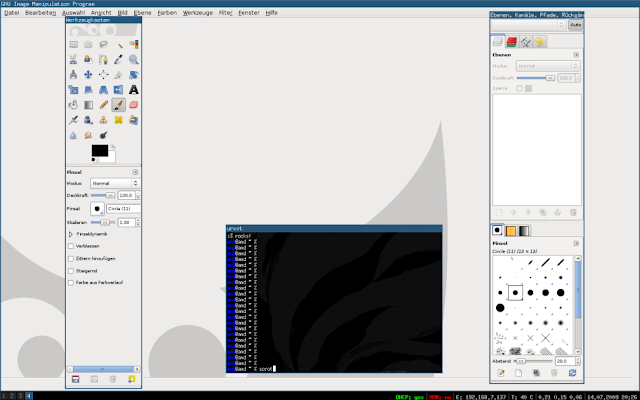

Querying the Cloud Database

A major change happened in Raven 4: RQL replaces Lucene as the default language for queries and patches. If you don’t run RavenDB yet, there's no need to be alarmed. But for folks migrating from RavenDB 3.5, this will potentially have a significant impact, requiring big changes to the code.

The good news is that RQL comes with important changes and multiple improvements in the new Management Studio. The UI is now friendlier, faster and simpler to use for querying and exporting data.

RQL’s syntax is a mix of .NET’s LINQ and JavaScript. Overall, it’s elegant, clean and simple to use, and it makes querying and patching the Raven database simpler. However, for users currently relying on Lucene, this may represent a risk, as those queries will have to be migrated to RQL (and subsequently, extensively tested).
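To give a feel for the syntax, here's a small hypothetical Python helper that builds a parameterized RQL query string. It's only an illustration: the collection and field names are made up, and it covers nothing beyond simple equality filters. Passing values as `$`-parameters instead of concatenating them into the query avoids quoting and escaping problems.

```python
from typing import Any, Dict, Tuple


def build_rql(collection: str, filters: Dict[str, Any]) -> Tuple[str, Dict[str, Any]]:
    """Build 'from <collection> where a = $p0 and b = $p1' plus its parameters."""
    clauses = []
    params: Dict[str, Any] = {}
    for i, (field, value) in enumerate(filters.items()):
        name = f"p{i}"  # generated names avoid clashes with dotted field paths
        clauses.append(f"{field} = ${name}")
        params[name] = value
    query = f"from {collection}"
    if clauses:
        query += " where " + " and ".join(clauses)
    return query, params


query, params = build_rql("Users", {"Name": "Alice", "Address.City": "London"})
print(query)   # from Users where Name = $p0 and Address.City = $p1
print(params)  # {'p0': 'Alice', 'p1': 'London'}
```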

Further Reference:

Breaking the language barrier

Querying: RQL - Raven Query Language

Running queries on the Portal

Querying with RQL from the Studio is straightforward. .NET developers should recognize the language, since it's very similar to LINQ:

Customizing Queries

The Studio allows us to choose which columns should be shown in the results table:

Patching Data

The patch tool accepts RQL. For example, this is how we apply a simple patch using the new syntax:

Managing the database using the C# API

When we consider a database in the cloud, we should ask how well it supports automation. RavenDB provides a powerful C# API; below I show some of its operations (source here):

Risks

Every new technology carries risks. Even though RavenDB is a mature technology (and its developers are bright!), there are risks to consider with this and any new platform. I highlight:

- Costs: I wasn't able to determine the overall cost, mainly because all values provided by RavenDB are estimates. If cost is an important requirement for the migration, a more in-depth evaluation should be performed.

- Performance: I didn't invest much time testing the performance of Raven Cloud. The good news is that Raven 4 is much faster than 3.5 and can be hosted on the same cloud provider / region as your application.

- Hidden Costs: as previously said, all prices listed are estimates. It’s probable that other costs will be added to your bill at the end of the month.

- RQL: RQL is the new way to run queries against the Raven 4 database. However, due to the number and complexity of our queries that rely on Lucene (the old, advanced way of querying the Raven database), migrating all the complex queries to RQL will be a challenge in terms of the time and testing effort required.

- Major changes in the API: ignore this if you're new to RavenDB. But if you were using RavenDB 3.5, there are significant changes in the RavenDB 4 API. Potentially broken dependencies include business logic (if implicitly coupled to RavenDB 3.5), indexes, tests and tools. Also, Lucene queries, Map-Reduce indexes, patches and logic that contains bulk-insert operations will likely have to be upgraded.

- NServiceBus: the Raven 4 API depends on libraries that conflict with NServiceBus, so a RavenDB upgrade may also require an NServiceBus upgrade.

For Further Investigation

My short experience with RavenDB Cloud was solid. However, I would like to highlight other topics that could be researched in the future:

- Full Cost Estimate – all the costs in this post are estimates and are subject to variation. Most of these estimates were provided by RavenDB on the Raven Cloud website. It’s highly probable that costs will be higher in a real production environment. But for what Raven Cloud provides, I still find its prices very attractive.

- Performance Benchmarks – I personally didn’t run any performance benchmarks when testing Raven Cloud. However, during this exercise I noticed that both the local and the cloud versions of RavenDB 4 showed a good increase in overall performance.

- Security – No security tests were performed, as they were outside the scope of the spike. My understanding is that security is much beefier in Raven 4. But how secure is it?

- SQL Integration – The free version doesn’t support SQL replication. It’s a very important feature for those who need some sort of reporting, and probably a good reason to move to the dev/prod subscriptions.

- Backup/Restore – The backup/restore feature wasn’t tested because the only available option for the AWS free version was on S3 storage. Worth investigating if considering using the Raven Cloud on production. My experience with a local install of Raven 4 is that it’s reliable and super fast!

- Smuggler – The smuggler tool is available on the Raven 4 Api. I built a simple console tool to manage databases and import/export data. The source code is available here.

- Cluster Api – since the free version does not include clustering, I couldn’t test the Api. However, since the Raven Apis are extensive and well documented, I don’t expect any problems with that.

Conclusion

This ends the Raven Cloud evaluation. Thanks for reading! Hopefully this quick look at its features helped clarify what RavenDB offers. For me, RavenDB is a very strong alternative in the NoSQL market, and its cloud offering brings significant benefits for teams looking to reduce costs. I dare say that, for all it provides, RavenDB Cloud is a strong contender against MongoDB Atlas, Elasticsearch and Azure Cosmos DB.

References

- RavenDB Cloud

- RavenDB on the Cloud: Overview

- RavenDB on the Cloud: Security

- Cluster: Cluster API

- Breaking the language barrier

- Querying: RQL - Raven Query Language

- Raven Cloud - Pricing

- List of Differences in Client API between 3.x and 4.0

- Apache Lucene

See Also

- My journey to 1 million articles read

- A simple introduction to RavenDB

- Installing and Running RavenDB on Windows and Linux

- Running RavenDB on Docker

- Patching RavenDB Metadata

- Querying RavenDB Metadata

- Creating ASP.NET Core websites with Docker

- Send emails from ASP.NET Core websites using SendGrid and Azure